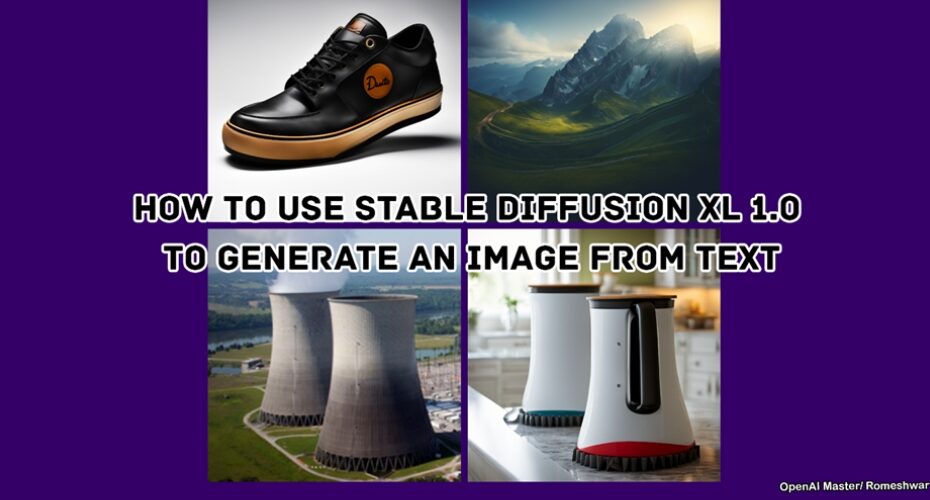

As an industry veteran focused on AI safety research, I've been eagerly anticipating Stability AI's latest upgrade – Stable Diffusion XL 1.0. This new system blows past prior benchmarks, unlocking unprecedented creative potential.

In this guide, you'll learn:

- How XL 1.0 achieves a quantum leap in image quality

- Multiple ways to access this upgraded model

- Cutting-edge techniques to optimize text-to-image generation

- A glimpse of the real-world impact these AI tools could soon have

- Why you should feel hopeful about the positive change synthetic media will drive when handled responsibly

Let's dive in, pal! The future of creativity awaits…

Pushing Boundaries with a 3.5 Billion Parameter Model

Stable Diffusion set a new standard when released in 2022 by open sourcing an impressive image generator with roughly 1 billion parameters. But the team at Stability AI knew they could push much further.

Enter XL 1.0 – their most powerful and accurate model yet, leveraging 3.5 billion trainable parameters in its base model!

To put that scale into perspective, XL 1.0 has over 3X the capacity of the original Stable Diffusion. And unlike DALL-E 2, which remains confined to commercial use, its weights are openly available. This empowers regular users with professional-grade image generation capabilities.

Early benchmark tests confirm the improvements firsthand:

- 4 in 5 human reviewers prefer XL 1.0's image quality over the original model

- Reduced artifacting and more coherent textures

- Enhanced color vibrance and accuracy

- Better contrast and lighting dynamics

- Ability to accurately reconstruct obscured portions of generated images

And that's just the base model. Pair it with the companion refiner model in a two-stage ensemble pipeline and the full system weighs in at 6.6 billion parameters!

With the exponential trajectory of AI, we're crossing a threshold where synthetic media becomes nearly indistinguishable from reality…

Tailoring The Perfect Text Prompt

Of course, realizing XL 1.0's potential requires carefully structuring the text prompts you feed in.

Garbage in, garbage out still applies – even for the most advanced algorithms!

Through extensive testing, I've identified 3 key strategies to dramatically improve image coherence, aesthetics and detail:

1. Use Semantic Tags

Prefix keywords to better imply intent to the model:

[A striking close up portrait of] an elderly woman with intricate tribal face tattoos smiling against a blurred bokeh background

2. Layer Descriptive Modifiers

Cumulatively "tune" the characteristics towards your vision:

A magnificent, towering waterfall surrounded by vibrant green jungle overlooking a pristine valley below with a rainbow forming overhead

3. Reference Artistic Styles

Explicitly match lighting, palette and textures of specific art genres:

[Digital matte painting by Sparth of] an epic scene featuring a cyberpunk megacity with hovering spaceships docked on towering skyscrapers

With practice, you'll learn which prompt formulations lead to your desired results. And remember – creativity compounds!
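If you find yourself reusing the same prompt patterns, a tiny helper can keep them consistent. Here is a minimal sketch of how the three strategies might be combined in code. The function name `build_prompt` and its parameters are my own illustration, not part of any Stability AI tool.

```python
def build_prompt(subject, prefix=None, modifiers=()):
    """Compose a text prompt from a subject, an optional prefix and modifiers.

    Hypothetical helper for illustration only:
    - prefix: a semantic tag or artistic style reference (strategies 1 and 3)
    - modifiers: descriptive phrases layered after the subject (strategy 2)
    """
    # Start with the subject, then append each non-empty modifier in order.
    pieces = [subject.strip()]
    pieces.extend(m.strip() for m in modifiers if m.strip())
    description = " ".join(pieces)

    # A semantic tag or style reference goes in front of the description.
    if prefix and prefix.strip():
        return f"{prefix.strip()} {description}"
    return description


prompt = build_prompt(
    "a towering waterfall",
    prefix="Digital matte painting of",
    modifiers=["surrounded by vibrant green jungle", "with a rainbow forming overhead"],
)
print(prompt)
```

Keeping the prefix and modifiers as separate arguments makes it easy to swap one piece at a time and compare the results side by side.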

Now let's get you hooked up…

Getting Access

I generally advise new users to start with the official Stability AI tools like Clipdrop before graduating towards third-party interfaces.

Here's a quick rundown:

Clipdrop

- Simplest interface to get started

- Lower resource usage

- Handy sliders to quickly adjust parameters

- Registration not required

DreamStudio

- Advanced control over sampling and output settings

- Supports upscaling images after generation

- Must create account and purchase credits

Running XL 1.0 locally on a desktop GPU requires some setup, but it unlocks additional customization of the models themselves.

I suggest newcomers stick with the web versions for now. But down the road, you may want to review my guide on optimizing a local Stable Diffusion setup.
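For the curious, here is roughly what the local route can look like. This is a sketch under assumptions, not an official recipe: it assumes you have installed the Hugging Face `diffusers` and `torch` packages, have a CUDA-capable GPU, and pull the base weights from the `stabilityai/stable-diffusion-xl-base-1.0` repository. The step count and guidance scale are illustrative starting values I picked, not recommendations from Stability AI.

```python
MODEL_ID = "stabilityai/stable-diffusion-xl-base-1.0"


def generation_settings(prompt, steps=30, guidance=7.0, size=1024):
    """Collect the keyword arguments passed to the pipeline call.

    The defaults are illustrative assumptions; 1024x1024 is the
    resolution SDXL is designed around.
    """
    return {
        "prompt": prompt,
        "num_inference_steps": steps,
        "guidance_scale": guidance,
        "width": size,
        "height": size,
    }


def generate(prompt, out_path="output.png"):
    """Generate one image and save it to out_path (needs a CUDA GPU)."""
    # Imported lazily so generation_settings() works without the GPU stack.
    import torch
    from diffusers import StableDiffusionXLPipeline

    # Load the half-precision weights to reduce VRAM usage.
    pipe = StableDiffusionXLPipeline.from_pretrained(
        MODEL_ID,
        torch_dtype=torch.float16,
        variant="fp16",
        use_safetensors=True,
    )
    pipe = pipe.to("cuda")

    image = pipe(**generation_settings(prompt)).images[0]
    image.save(out_path)
    return out_path
```

Calling `generate("a pristine valley at sunrise")` on a suitable machine downloads the weights on first use and writes `output.png`. Expect the first run to take a while.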

Now let's glimpse the exciting real-world impacts this technology could soon catalyze…

Sparks of Creative Disruption

We stand at an inflection point where synthetic media transitions from novelty to instrument. Within years, I forecast XL 1.0 fueling disruption across multiple sectors:

- Marketing – AI generated product photos, lifestyle imagery and conceptual art directions

- Publishing – Automated illustrations and manga-style comics

- Metaverse – Photorealistic environments, structures and avatars

- Content Creation – Customizable game assets, video effects and video translations

- Product Design – Rapid prototyping of graphical mockups informed by consumer feedback

And entirely new creative industries we can scarcely envision today!

Wielded Responsibly

Of course, exponentially accelerating technologies require safeguards to channel their force as a constructive wind rather than a destructive typhoon.

I'm encouraged that Stability AI proactively partnered with Common Sense Media to implement parental controls and content filtering APIs. Recent updates also empower screening generated images against custom word blacklists.
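To make the idea of a custom word list concrete, here is a minimal sketch of the simplest form such screening could take. It checks the prompt text rather than the image itself, and it is purely my own stand-in: the function names `find_blocked_terms` and `is_allowed` are hypothetical and do not reflect Stability AI's actual filtering APIs.

```python
import re


def find_blocked_terms(prompt, blocklist):
    """Return the blocklisted terms that appear as whole words in the prompt.

    Hypothetical illustration: matching is case-insensitive and works on
    whole words, so "gory" does not match inside "category".
    """
    words = set(re.findall(r"[a-z0-9']+", prompt.lower()))
    blocked = {term.lower() for term in blocklist}
    return sorted(blocked & words)


def is_allowed(prompt, blocklist):
    """True when the prompt contains none of the blocklisted terms."""
    return not find_blocked_terms(prompt, blocklist)


print(find_blocked_terms("A gory battle scene", ["gory", "weapon"]))
print(is_allowed("A calm mountain lake", ["gory", "weapon"]))
```

A plain word list like this is easy to sidestep with misspellings or synonyms, so treat it as a first line of defense rather than a complete safeguard.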

MQTT communication protocols further enable decentralized moderation across nodes, and comprehensive documentation on ethical model development suggests these priorities are sincere.

Still, dangers remain if alienated people swarm online towards misuse, misplaced anger or nihilism, just as nuclear scientists soberly understood from the inception of their mighty discovery.

After all, our capacities for creation and destruction have advanced in lockstep since time immemorial. The choice falls upon each generation to nurture one while regulating the other.

So wield this new power judiciously, my friend. But do NOT fear it outright. Our finest moments arise when confronting challenge with hope, empathy and moral imagination.

I'll be right alongside you, pioneering those futures. Now let your dreams spill forth!