In our increasingly digital world, computers have become an indispensable part of daily life. From the smartphones we carry in our pockets to the supercomputers modeling climate change, these remarkable machines have fundamentally altered how we work, communicate, and perceive reality. Join us on an enlightening journey through the fascinating realm of computer technology as we explore its rich history, inner workings, and tantalizing future potential.

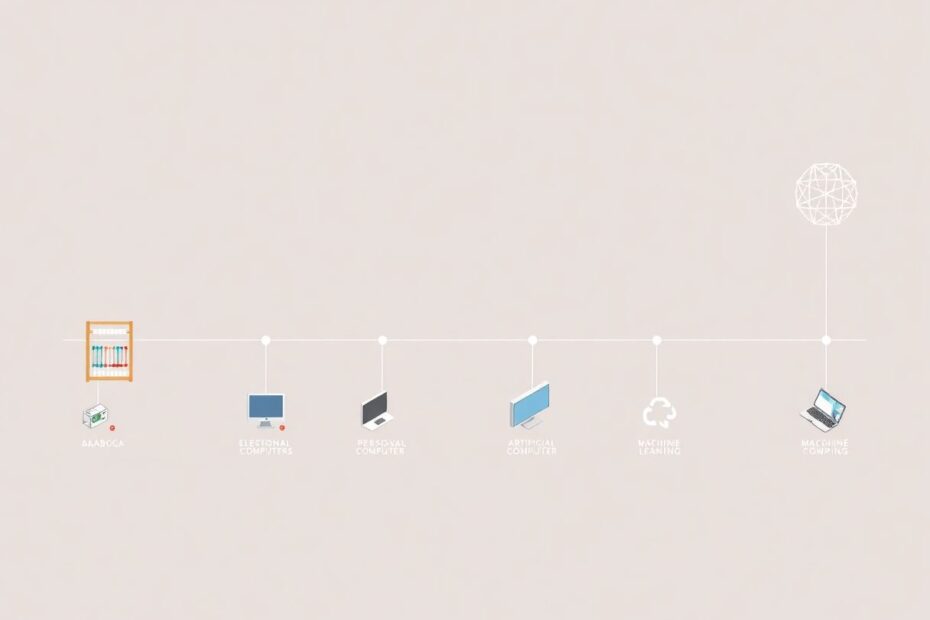

The Birth of Computing: From Mechanical to Electronic

Early Mechanical Computers

The story of computing stretches back far beyond our modern digital age. The abacus is one of humanity's earliest known computing devices; counting boards of this kind were probably in use in Mesopotamia by around 2400 BC, though their exact origins are uncertain. Early versions used pebbles moved along lines or grooves, while later designs, such as the Chinese suanpan, slid beads along rods, allowing rapid arithmetic by hand. For millennia, the abacus remained the pinnacle of calculation technology across many civilizations.

Fast forward to the 17th century, and we witness the emergence of more sophisticated mechanical calculators. Blaise Pascal's Pascaline, created in 1642, represented a significant leap forward. This gear-driven device could perform addition and subtraction automatically, a remarkable feat for its time. Pascal's invention laid the groundwork for future mechanical computers and inspired other luminaries to push the boundaries of calculation.

One such visionary was Gottfried Wilhelm Leibniz, who famously declared, "It is unworthy of excellent men to lose hours like slaves in the labor of calculation which could safely be relegated to anyone else if machines were used." Leibniz's words in the late 17th century presciently foreshadowed the computer revolution to come. His mechanical calculator, known as the Stepped Reckoner, improved upon Pascal's design by using a stepped drum to mechanize multiplication and division as well as addition and subtraction. The Stepped Reckoner itself worked in decimal, but Leibniz also studied binary arithmetic, publishing an account of it in 1703, and that system of 0s and 1s became the very foundation of modern computing.

The Transition to Electronic Computing

While mechanical computers continued to evolve, the real watershed moment in computing history arrived in the mid-20th century with the development of electronic computers. The ENIAC (Electronic Numerical Integrator and Computer), completed in 1945 at the University of Pennsylvania, is widely regarded as the first general-purpose electronic computer. This behemoth occupied about 1,800 square feet of floor space and used an astounding total of nearly 18,000 vacuum tubes to perform its calculations.

The ENIAC marked the beginning of a rapid succession of innovations in electronic computing, which built on theoretical work from the decade before. Key milestones of this transformative period include:

- 1936: Alan Turing describes a universal machine in his paper "On Computable Numbers," laying the theoretical foundation for modern computers.

- 1945: ENIAC becomes operational, ushering in the era of electronic computing.

- 1951: The UNIVAC I (Universal Automatic Computer I) becomes the first commercial computer produced in the United States, finding applications in business and government.

- 1953: IBM enters the electronic computer market with the IBM 701, setting the stage for its future dominance in the industry.

These early electronic computers, while primitive by today's standards, represented a quantum leap in calculation speed and versatility compared to their mechanical predecessors. They paved the way for the digital revolution that would reshape every aspect of human society in the decades to come.

The Anatomy of a Modern Computer

To truly appreciate the marvel of modern computing, it's essential to understand the core components that make up these powerful machines. Let's take a closer look at the anatomy of a typical computer:

Input Devices: These components allow us to communicate with the computer, translating our actions into data the machine can process. Common input devices include keyboards, mice, touchscreens, and microphones. More specialized input devices might include game controllers, drawing tablets, or even brain-computer interfaces.

Output Devices: These components present information from the computer back to us in a form we can understand. The most common output devices are monitors, speakers, and printers. However, this category also includes more advanced technologies like virtual reality headsets or haptic feedback systems.

Processing Unit: Often referred to as the "brain" of the computer, the Central Processing Unit (CPU) performs the majority of calculations and logical operations. Modern CPUs can execute billions of instructions per second. In addition to the CPU, many computers also utilize Graphics Processing Units (GPUs) for specialized tasks like rendering complex 3D graphics or performing parallel computations for machine learning algorithms.
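The CPU's job of executing instructions follows a repeating fetch-decode-execute cycle. As a rough illustration, here is a toy interpreter in Python for a hypothetical machine with two registers and four invented instructions (`LOAD`, `ADD`, `MUL`, `HALT`). It is not modeled on any real processor's instruction set; real CPUs carry out this cycle in hardware, on binary-encoded instructions, billions of times per second.

```python
# Toy fetch-decode-execute loop for a HYPOTHETICAL instruction set.
# Each instruction is (opcode, register, operand); real CPUs use binary machine code.

def run(program: list[tuple[str, str, object]]) -> dict[str, int]:
    registers = {"A": 0, "B": 0}
    pc = 0  # program counter: index of the next instruction to fetch
    while True:
        opcode, reg, operand = program[pc]  # fetch
        pc += 1
        # decode and execute
        if opcode == "LOAD":      # put a constant into a register
            registers[reg] = operand
        elif opcode == "ADD":     # add another register's value
            registers[reg] += registers[operand]
        elif opcode == "MUL":     # multiply by another register's value
            registers[reg] *= registers[operand]
        elif opcode == "HALT":    # stop and report the final register contents
            return registers
        else:
            raise ValueError(f"unknown opcode {opcode!r}")


# Computes (2 + 3) * 3 using two registers.
program = [
    ("LOAD", "A", 2),
    ("LOAD", "B", 3),
    ("ADD", "A", "B"),  # A = 2 + 3 = 5
    ("MUL", "A", "B"),  # A = 5 * 3 = 15
    ("HALT", "", None),
]
print(run(program))  # {'A': 15, 'B': 3}
```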

Memory and Storage: Computers rely on different types of memory and storage to function effectively. Random Access Memory (RAM) provides fast, short-term storage for data and instructions that the CPU needs immediate access to. For long-term storage, computers use hard disk drives (HDDs) or solid-state drives (SSDs). SSDs offer faster access times and improved reliability compared to traditional HDDs, though often at a higher cost per gigabyte of storage.

Motherboard: This main circuit board serves as the central nervous system of the computer, connecting all other components and allowing them to communicate with each other. The motherboard contains crucial elements like the system bus, which transfers data between components, and various ports for connecting peripheral devices.

Understanding these core components provides a foundation for appreciating the incredible complexity and capability of modern computers. From smartphones to supercomputers, these basic elements work in harmony to perform the myriad tasks we've come to rely on in our digital age.

How Computers Process Information

At its most fundamental level, a computer processes information using a binary system – a series of 0s and 1s. This simplicity belies the incredible complexity of operations that computers can perform. But how exactly does this binary foundation translate into the sophisticated applications and processes we see in action every day?
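A short Python sketch of what "binary" means in practice: `to_binary` (a helper written for this example) converts a number to base 2 by repeated division by 2, and the built-ins `int(..., 2)`, `format(..., "b")` and `ord` show the same idea, including how a text character is stored as a pattern of bits.

```python
def to_binary(n: int) -> str:
    """Convert a non-negative integer to base-2 digits by repeated division."""
    if n < 0:
        raise ValueError("this sketch only handles non-negative integers")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # the remainder is the next bit, least significant first
        n //= 2
    return "".join(reversed(digits))


print(to_binary(13))            # 1101  (8 + 4 + 1)
print(int("1101", 2))           # 13, Python's built-in base-2 parsing
print(format(ord("A"), "08b"))  # 01000001, the character "A" (code point 65) as 8 bits
```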

The process can be broken down into four main stages:

- Input: Information is entered into the computer through various input devices or data streams.

- Storage: The input data is stored in memory, either temporarily in RAM or more permanently on a storage device.

- Processing: The CPU performs calculations and operations on the data according to the instructions provided by software.

- Output: Results are displayed, stored, or transmitted to other devices or systems.
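The four stages can be mimicked in a few lines of Python. This is a toy model under loose assumptions: the stage functions (`read_input`, `store`, `process`, `output`) are hypothetical names, a Python list stands in for RAM, and "processing" is just tidying up some typed text, similar to what a search box might do before sending a query.

```python
# A toy model of the input -> storage -> processing -> output cycle.
# The stage functions are hypothetical illustrations, not how an operating system works.

def read_input(keystrokes: str) -> list[str]:
    """Input: turn raw keystrokes into data the program can work with."""
    return list(keystrokes)


def store(memory: list[str], data: list[str]) -> None:
    """Storage: keep the data in 'memory' (a Python list standing in for RAM)."""
    memory.extend(data)


def process(memory: list[str]) -> str:
    """Processing: apply the program's instructions, here trimming and lowercasing text."""
    return "".join(memory).strip().lower()


def output(result: str) -> str:
    """Output: format the result for display."""
    return f"Searching for: {result!r}"


memory: list[str] = []
store(memory, read_input("  Computer History "))
print(output(process(memory)))  # Searching for: 'computer history'
```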

To illustrate this process, consider the analogy of baking a cake. The ingredients are the input, and the recipe plays the role of software, telling you what to do with them. Your pantry and countertop act as storage, holding ingredients until you need them, while your kitchen utensils and appliances serve as the processing units, transforming the raw ingredients. The finished cake is the output. A computer follows a similar sequence, but at speeds and scales that are difficult for the human mind to comprehend.

For example, when you type a search query into a web browser, your keystrokes serve as the input. This data is briefly stored in memory before being processed by the CPU, which executes the browser software's instructions to formulate and send a request to a search engine. The search engine's servers process this request and send back results, which your computer then outputs to your screen – all in a fraction of a second.

This simple example barely scratches the surface of modern computing capabilities. Today's computers execute billions of instructions per second and, using multiple processor cores and rapid switching between programs, can render complex 3D graphics, analyze large datasets, or run scientific simulations, all while you check your email or stream a video.

The true power of computers lies not just in their ability to process information quickly, but in their versatility. The same basic hardware can be programmed to perform an almost limitless variety of tasks, from controlling industrial robots to composing music. This flexibility, combined with ever-increasing processing power, continues to open new frontiers in what's possible with computer technology.

The Rise of Personal Computing

The 1970s and 1980s witnessed a paradigm shift in computing with the birth of personal computing. This era saw computers transition from room-sized machines accessible only to large organizations to devices that could fit on a desk in homes and small offices. Companies like Apple, Commodore, and IBM were at the forefront of this revolution, bringing the power of computing to the masses.

Key milestones in the personal computing revolution include:

- 1976: Steve Jobs and Steve Wozniak release the Apple I, one of the first personal computers sold as a fully assembled circuit board.

- 1981: IBM introduces the IBM PC, setting a standard that would dominate the industry for decades.

- 1984: Apple launches the Macintosh, bringing a graphical user interface and mouse to a mass market and making computers more accessible to non-technical users.

These machines fundamentally changed how people worked and played. Word processing software replaced typewriters, allowing for easy editing and formatting of documents. Spreadsheet programs like VisiCalc and later Excel revolutionized financial planning and data analysis. Computer games opened up new realms of entertainment, from text adventures to early graphical games.

The personal computing revolution wasn't just about hardware and software; it represented a democratization of computing power. Previously, access to computers was limited to large corporations, universities, and government agencies. Now, individuals could harness this technology for their own purposes, whether for business, education, or personal projects.

This shift had profound implications for society. It laid the groundwork for the information age, where data and computational power became increasingly central to everyday life. The skills needed to use computers became valuable in the job market, leading to changes in education and workforce training.

As personal computers became more powerful and user-friendly, they also became more interconnected, setting the stage for the next great revolution in computing: the Internet.

The Internet: Connecting the World

The development of the Internet in the late 20th century marked another quantum leap in computing technology, fundamentally changing how we communicate, access information, and conduct business. What began as a project to connect research institutions has grown into a global network that touches nearly every aspect of modern life.

How the Internet Works

At its core, the Internet is a vast network of interconnected computers and servers, communicating using standardized protocols. When you access a website, a complex series of events occurs in mere milliseconds:

- Your computer sends a request to a Domain Name System (DNS) server to translate the human-readable website address into an IP address.

- Once the IP address is obtained, your computer sends a request to the server hosting the website.

- The server processes the request and sends back the requested information, typically in the form of HTML, CSS, and JavaScript files.

- Your web browser interprets these files and displays the website on your screen.
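The lookup, request, and response steps can be traced with Python's standard library. To keep the sketch runnable without Internet access, it starts a tiny local web server and resolves the name `localhost`, which the operating system answers itself (typically from its hosts file) rather than by querying a remote DNS server. The page content and the `Handler` class are made up for this example, and real browsers add caching, TLS encryption (HTTPS), and many parallel requests.

```python
import http.client
import socket
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

PAGE = b"<html><head><title>Hello</title></head><body><p>Hi!</p></body></html>"


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Step 3: the server processes the request and sends back an HTML file.
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(PAGE)))
        self.end_headers()
        self.wfile.write(PAGE)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


server = HTTPServer(("127.0.0.1", 0), Handler)  # port 0: the OS picks a free port
port = server.server_address[1]
threading.Thread(target=server.serve_forever, daemon=True).start()

# Step 1: translate a host name into an IP address.
ip = socket.getaddrinfo("localhost", port, socket.AF_INET, socket.SOCK_STREAM)[0][4][0]
print("localhost resolves to", ip)

# Step 2: send an HTTP GET request to the server at that address.
conn = http.client.HTTPConnection(ip, port, timeout=5)
conn.request("GET", "/")
response = conn.getresponse()
status, body = response.status, response.read().decode("utf-8")
conn.close()

# Step 4: a browser would now parse this HTML and render it on screen.
print(status, body)

server.shutdown()
server.server_close()
```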

This process happens so quickly and seamlessly that we rarely consider the incredible infrastructure that makes it possible. From undersea cables spanning oceans to satellites in orbit, the physical layer of the Internet is a marvel of modern engineering.

The World Wide Web

While the Internet provides the infrastructure, the World Wide Web, invented by Tim Berners-Lee in 1989, made this technology accessible and useful to the general public. The Web relies on several key technologies:

- HTML (Hypertext Markup Language) for structuring content

- URLs (Uniform Resource Locators) for addressing web pages

- HTTP (Hypertext Transfer Protocol) for transmitting data between clients and servers
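Two of these building blocks can be explored with Python's standard library: `urllib.parse.urlsplit` breaks a URL into its parts, and `html.parser.HTMLParser` can pick out the hyperlinks in an HTML document, with `urljoin` resolving relative links against the page's address. The URL and HTML snippet are made-up examples, and `LinkCollector` is a helper class written for this sketch.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit

url = "https://www.example.com/docs/intro.html?lang=en#history"
parts = urlsplit(url)
print(parts.scheme, parts.netloc, parts.path, parts.query, parts.fragment)
# https www.example.com /docs/intro.html lang=en history


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag: the links that make HTML 'hypertext'."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links against the page's own URL.
                    self.links.append(urljoin(self.base_url, value))


html = '<p>See <a href="next.html">the next page</a> or <a href="/">home</a>.</p>'
collector = LinkCollector(url)
collector.feed(html)
collector.close()
print(collector.links)
# ['https://www.example.com/docs/next.html', 'https://www.example.com/']
```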

These technologies, along with advancements in web browsers, transformed the Internet from a tool primarily used by academics and governments into a platform for global commerce, communication, and information sharing.

The impact of the Web on society has been profound. It has revolutionized how we access information, with encyclopedias and libraries now at our fingertips. E-commerce has transformed retail, allowing small businesses to reach global markets. Social media platforms have changed how we connect with others and share our lives. Online education has made learning more accessible than ever before.

As the Web has evolved, new technologies like cloud computing, big data analytics, and the Internet of Things (IoT) have emerged, further expanding the possibilities of what can be achieved through interconnected computing systems.

Mobile Computing: Computers in Our Pockets

The 21st century has seen the meteoric rise of mobile computing, putting powerful computers in our pockets and fundamentally altering how we interact with technology. Smartphones and tablets have become ubiquitous, serving as our constant companions and gateways to the digital world.

The mobile revolution can be traced to several key events:

- 2007: Apple introduces the iPhone, setting a new standard for smartphones with its touchscreen interface and app ecosystem.

- 2008: The first Android phone is released, offering an open-source alternative to Apple's iOS.

- 2010: Apple launches the iPad, popularizing the tablet form factor and creating a new category of mobile devices.

These devices represent a convergence of multiple technologies:

- High-resolution touchscreens for intuitive interaction

- GPS for location services and navigation

- Advanced cameras for photography and augmented reality applications

- Powerful processors capable of running sophisticated apps and games

- Long-lasting batteries to support extended use

Mobile devices have had a transformative effect on numerous industries. They've revolutionized photography, with smartphones now the most common cameras in the world. They've changed how we consume media, with streaming services optimized for mobile viewing. Mobile banking and payment systems have altered financial transactions, particularly in developing countries where traditional banking infrastructure is limited.

The app economy, spawned by mobile devices, has created entirely new business models and job categories. From ride-sharing services to mobile games, apps have become a central part of how we work, play, and manage our daily lives.

As mobile devices continue to evolve, we're seeing the lines blur between phones, tablets, and traditional computers. Foldable displays, improved stylus support, and increasingly powerful processors are expanding what's possible with mobile computing. The integration of artificial intelligence and machine learning directly on mobile devices is opening up new frontiers in personalized computing experiences.

Cloud Computing: The Virtual Data Center

Cloud computing has emerged as a transformative force in the world of information technology, fundamentally changing how businesses and individuals store, access, and process data. Instead of relying solely on local hardware, cloud computing allows users to harness the power of remote servers accessed via the Internet.

The benefits of cloud computing are numerous:

- Scalability: Cloud services can easily scale up or down based on demand, allowing businesses to quickly adjust their computing resources as needed.

- Accessibility: Data and applications stored in the cloud can be accessed from anywhere with an internet connection, facilitating remote work and global collaboration.

- Cost-effectiveness: By using cloud services, organizations can reduce their investment in physical hardware and maintenance, paying only for the resources they actually use.

- Reliability: Cloud providers typically offer robust backup and disaster recovery solutions, which help protect data and maintain business continuity.
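Scalability usually comes down to a rule that turns current demand into a number of servers. The function below is a simplified, hypothetical version of that idea: it assumes each server handles a fixed number of requests per second, and the 100 requests/second capacity and the 1 to 20 server limits are illustrative values, not any provider's defaults. Managed autoscaling services add smoothing, cooldown periods, and health checks on top of a rule like this.

```python
import math


def desired_instances(requests_per_sec: float, capacity_per_instance: float,
                      min_instances: int = 1, max_instances: int = 20) -> int:
    """Run just enough servers for the current load, within fixed limits."""
    needed = math.ceil(requests_per_sec / capacity_per_instance)
    return max(min_instances, min(max_instances, needed))


# Hypothetical demand at different times of day, in requests per second.
for load in [50, 420, 1800, 3000, 90]:
    print(load, "->", desired_instances(load, capacity_per_instance=100))
# 50 -> 1, 420 -> 5, 1800 -> 18, 3000 -> 20 (capped), 90 -> 1
```

Because servers are added only when the load calls for them and released afterward, the pay-per-use model means paying for about 5 servers at 420 requests per second rather than keeping enough for peak demand running all day.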

Several major players dominate the cloud computing landscape:

- Amazon Web Services (AWS): The largest cloud provider, offering a vast array of services from basic storage to advanced machine learning tools.

- Microsoft Azure: Leveraging Microsoft's enterprise software expertise to provide a comprehensive cloud platform.

- Google Cloud Platform: Known for its strength in data analytics and machine learning capabilities.

Cloud computing has enabled new business models and technological advancements. Software-as-a-Service (SaaS) applications like Salesforce and Google Workspace have changed how businesses operate. Platform-as-a-Service (PaaS) offerings allow developers to build and deploy applications without managing the underlying infrastructure. Infrastructure-as-a-Service (IaaS) provides flexible, scalable computing resources for organizations of all sizes.

The impact of cloud computing extends beyond business applications. Consumer services like iCloud, Google Drive, and Dropbox rely on cloud infrastructure to provide seamless file storage and synchronization across devices. Streaming services like Netflix and Spotify use cloud computing to deliver content to millions of users simultaneously.

As cloud computing continues to evolve, we're seeing increased focus on edge computing, which brings computation and data storage closer to the location where it's needed. This approach can reduce latency and bandwidth use, crucial for applications like autonomous vehicles and industrial IoT.
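A back-of-the-envelope calculation shows why distance matters for latency. It assumes light in optical fiber travels at roughly 200,000 km/s (about two-thirds of its speed in a vacuum) and uses 1,500 km and 20 km as hypothetical distances to a central data center and a nearby edge site. Only propagation delay is counted; real round trips are longer because of routing, queuing, and processing.

```python
# Light in optical fiber: roughly 200,000 km/s, i.e. about 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def round_trip_ms(distance_km: float) -> float:
    """Minimum round-trip time from propagation alone (there and back)."""
    return 2 * distance_km / FIBER_KM_PER_MS


print(round_trip_ms(1500))  # 15.0 ms to a distant data center
print(round_trip_ms(20))    # 0.2 ms to a nearby edge site
```

For a car traveling at 100 km/h, 15 ms corresponds to about 0.4 m of movement before a response could possibly arrive from the distant data center.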

The rise of cloud computing represents a shift in how we think about IT infrastructure, moving from a model of owned assets to one of flexible, on-demand services. This transition is ongoing, and its full implications for business, privacy, and global information flow are still unfolding.

Artificial Intelligence and Machine Learning

Artificial Intelligence (AI) and Machine Learning (ML) represent the cutting edge of computer technology, aiming to create intelligent machines that can learn, adapt, and solve complex problems. These fields are not just theoretical – they're already having a profound impact on various aspects of our lives, from the recommendations we see on streaming services to the voice assistants in our homes.

How AI Works

At its core, AI systems use algorithms to:

- Collect and process large amounts of data

- Analyze patterns and relationships within this data

- Make predictions or decisions based on this analysis

Machine Learning, a subset of AI, focuses on creating systems that can improve their performance on a task through experience. Instead of being explicitly programmed for every possible scenario, ML systems learn from data, becoming more accurate over time.

There are several types of machine learning:

- Supervised Learning: The algorithm is trained on a labeled dataset, learning to map inputs to outputs.

- Unsupervised Learning: The algorithm finds patterns in unlabeled data.

- Reinforcement Learning: The algorithm learns through interaction with an environment, receiving rewards or penalties for its actions.
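The difference between supervised and unsupervised learning can be seen in two classic, deliberately simple techniques, chosen here for brevity rather than taken from any particular AI system: fitting a straight line by least squares to labeled (input, output) pairs, and grouping unlabeled numbers with k-means clustering. The data points are made up. Reinforcement learning needs an interactive environment and is not shown.

```python
# Supervised learning: learn y ≈ slope * x + intercept from labeled examples
# using ordinary least squares.
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


# Unsupervised learning: k-means groups unlabeled 1-D points around k centroids.
def kmeans_1d(points: list[float], centroids: list[float], max_iter: int = 100) -> list[float]:
    for _ in range(max_iter):
        clusters: list[list[float]] = [[] for _ in centroids]
        for p in points:
            # Assign each point to its nearest centroid.
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty).
        updated = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
        if updated == centroids:  # nothing moved: converged
            break
        centroids = updated
    return centroids


slope, intercept = fit_line([1, 2, 3, 4], [3, 5, 7, 9])  # labeled (x, y) pairs
print(slope, intercept)                           # 2.0 1.0
print(slope * 5 + intercept)                      # 11.0, prediction for unseen x = 5
print(kmeans_1d([1, 2, 3, 10, 11, 12], [1, 12]))  # [2.0, 11.0]
```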

Deep Learning, a subset of machine learning based on artificial neural networks, has driven many recent breakthroughs in AI. These systems, loosely inspired by the structure of the human brain, can process vast amounts of data and perform tasks like image and speech recognition with remarkable accuracy.
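The basic building block of a neural network is an artificial neuron: a weighted sum of inputs passed through an activation function. The sketch below wires three such neurons into two layers that compute XOR, a function no single threshold neuron can represent. The weights are hand-picked to keep the example short and deterministic; in deep learning, networks with many more layers learn their weights from data during training, typically with backpropagation.

```python
def step(z: float) -> int:
    """Threshold activation: the neuron 'fires' (1) if its weighted input is positive."""
    return 1 if z > 0 else 0


def neuron(inputs: list[int], weights: list[float], bias: float) -> int:
    """Weighted sum of inputs plus bias, passed through the activation."""
    return step(sum(x * w for x, w in zip(inputs, weights)) + bias)


def xor_network(x1: int, x2: int) -> int:
    h_or = neuron([x1, x2], [1, 1], -0.5)        # hidden neuron 1 behaves like OR
    h_and = neuron([x1, x2], [1, 1], -1.5)       # hidden neuron 2 behaves like AND
    return neuron([h_or, h_and], [1, -1], -0.5)  # output: OR but not AND, i.e. XOR


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", xor_network(a, b))
# 0 0 -> 0
# 0 1 -> 1
# 1 0 -> 1
# 1 1 -> 0
```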

Real-world applications of AI and ML are increasingly common:

- Virtual assistants like Siri, Alexa, and Google Assistant use natural language processing to understand and respond to user queries.

- Recommendation systems on platforms like Netflix and Amazon use collaborative filtering and deep learning to suggest content or products.

- Self-driving cars use computer vision and other machine learning techniques, sometimes including reinforcement learning, to navigate complex environments.

- Medical diagnosis tools use machine learning to analyze medical images and patient data, assisting doctors in detecting diseases earlier and more accurately.
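Collaborative filtering, mentioned above for recommendation systems, can be sketched in its simplest user-based form: find users whose ratings resemble yours (here via cosine similarity over the items both rated) and rank the items you have not rated by a similarity-weighted average of their ratings. The users, films, and ratings are invented, and production recommenders at companies like Netflix and Amazon are far more sophisticated than this sketch.

```python
import math

Ratings = dict[str, dict[str, float]]


def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Cosine similarity computed over the items both users rated."""
    common = u.keys() & v.keys()
    if not common:
        return 0.0
    dot = sum(u[i] * v[i] for i in common)
    norm_u = math.sqrt(sum(u[i] ** 2 for i in common))
    norm_v = math.sqrt(sum(v[i] ** 2 for i in common))
    return dot / (norm_u * norm_v)


def recommend(user: str, ratings: Ratings) -> list[str]:
    """Rank unrated items by a similarity-weighted average of other users' ratings."""
    scores: dict[str, float] = {}
    weights: dict[str, float] = {}
    for other, their in ratings.items():
        if other == user:
            continue
        sim = cosine(ratings[user], their)
        if sim <= 0:
            continue
        for item, rating in their.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + sim * rating
                weights[item] = weights.get(item, 0.0) + sim
    predicted = {item: scores[item] / weights[item] for item in scores}
    return sorted(predicted, key=predicted.get, reverse=True)


ratings: Ratings = {
    "ana": {"Film A": 5, "Film B": 4, "Film C": 1},
    "ben": {"Film A": 4, "Film B": 5, "Film D": 5, "Film E": 2},
    "chen": {"Film A": 1, "Film C": 5, "Film D": 1, "Film E": 5},
}
print(recommend("ana", ratings))  # ['Film D', 'Film E']
```

Ana's tastes match Ben's closely and Chen's only weakly, so Ben's enthusiasm for Film D outweighs Chen's preference for Film E.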

The potential of AI extends far beyond these current applications. Researchers are exploring AI's potential to solve complex problems in fields like climate change mitigation, drug discovery, and renewable energy optimization.

However, the rise of AI also presents challenges and ethical considerations. Issues of bias in AI systems, the potential for job displacement, and questions of privacy and data use are at the forefront of discussions about the future of this technology.

As AI continues to advance, it's likely to become an even more integral part of our computing landscape, working in tandem with human intelligence to solve complex problems and push the boundaries of what's possible.

The Future of Computing

As we look to the horizon of computing technology, several exciting frontiers promise to revolutionize how we process information and interact with the digital world. Three areas, in particular, stand out