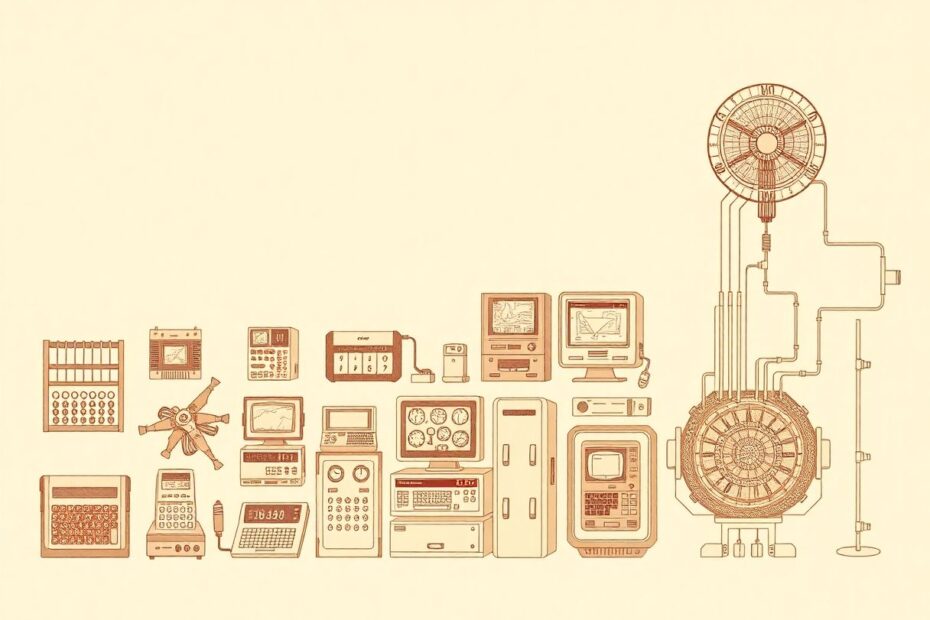

In the tapestry of human innovation, few threads have been as transformative as the development of computers. From the ancient abacus to today's quantum machines, the evolution of computing has been a testament to human ingenuity and our insatiable appetite for progress. This journey, spanning millennia, has not only revolutionized how we process information but has fundamentally altered the fabric of our society.

The Ancient Roots of Calculation

The story of computing begins long before the digital age, in the marketplaces of ancient civilizations. Counting boards were in use in Mesopotamia possibly as early as the third millennium BCE, and over the following centuries similar tools spread and evolved into the abacus, a simple yet ingenious device in which beads slide along rods or wires. This humble tool allowed for rapid addition and subtraction, embodying the core principle behind all later computing devices: performing calculations faster and more reliably than the unaided human mind.

For over two millennia, the abacus reigned supreme in the realm of calculation. Its longevity speaks to its effectiveness, and variants such as the Chinese suanpan and the Japanese soroban are still in use today. The abacus laid the groundwork for future mechanical calculators, demonstrating that physical tools could augment human cognitive abilities.

The Dawn of Mechanical Computing

The 17th century marked a pivotal moment in the history of computing with the emergence of more sophisticated mechanical calculators. In 1642, the French mathematical prodigy Blaise Pascal invented the Pascaline, one of the earliest mechanical calculators (Wilhelm Schickard had designed a "calculating clock" in 1623). This marvel of gears and cogs could add and subtract decimal numbers, carrying digits automatically from one wheel to the next, a major leap in calculation technology.

A few decades later, beginning in the early 1670s, the German polymath Gottfried Wilhelm Leibniz took mechanical calculation a step further. His Stepped Reckoner could not only add and subtract but also multiply and divide. Perhaps even more significantly, Leibniz worked out binary arithmetic, a system of representing numbers using only 0s and 1s, which he published in 1703. This seemingly simple idea would become the foundation of all digital computing more than two centuries later.
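To make the idea of binary concrete, the following Python sketch converts a non-negative integer into binary digits by repeatedly dividing by 2, and rebuilds it by doubling and adding one digit at a time. It is only an illustration of base-2 representation, not a reconstruction of Leibniz's own notation; `to_binary` and `from_binary` are hypothetical helper names chosen for this example.

```python
def to_binary(n: int) -> str:
    """Return the base-2 digits of a non-negative integer via repeated division by 2."""
    if n < 0:
        raise ValueError("only non-negative integers are supported")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # the remainder is the next digit, least significant first
        n //= 2
    return "".join(reversed(digits))


def from_binary(bits: str) -> int:
    """Rebuild the integer: for each digit, shift the total one place left and add the digit."""
    value = 0
    for bit in bits:
        value = value * 2 + int(bit)
    return value


for n in (5, 13, 42):
    print(n, "->", to_binary(n))  # 5 -> 101, 13 -> 1101, 42 -> 101010
```

Python's built-in `bin()` yields the same digits with a `0b` prefix, so the helpers above exist only to show the arithmetic step by step.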

The Analytical Engine: A Vision Ahead of Its Time

The 19th century saw the conception of a machine that was far ahead of its time – Charles Babbage's Analytical Engine. Designed in the 1830s, this mechanical marvel was never fully realized during Babbage's lifetime, but its blueprints contained the seeds of modern computing.

The Analytical Engine incorporated several elements that we now recognize as fundamental to computer architecture:

- An input system using punched cards, inspired by the Jacquard loom used in textile manufacturing.

- A memory unit (which Babbage called the "store") to hold numbers for calculations.

- A processor (the "mill") to perform arithmetic operations.

- An output system to print results.

Perhaps most remarkably, Ada Lovelace, who worked closely with Babbage, published a set of notes on the Analytical Engine in 1843 that included what is widely considered the first computer program: an algorithm, set out in her Note G, for calculating Bernoulli numbers on the machine. This work has earned her a reputation as the world's first computer programmer and reflects her visionary understanding of the machine's potential.
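For a sense of the kind of computation Lovelace described, here is a short Python sketch that generates Bernoulli numbers using one standard modern recurrence: starting from B_0 = 1, the sum over k from 0 to m of C(m+1, k)·B_k equals 0 for every m ≥ 1. This is not a transcription of Note G, whose sequence of operations and numbering of the Bernoulli numbers differ; `bernoulli_numbers` is a hypothetical helper name. Exact fractions are used because Bernoulli numbers are rational.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n: int) -> list[Fraction]:
    """Return [B_0, B_1, ..., B_n] as exact fractions.

    Uses the recurrence sum_{k=0}^{m} C(m+1, k) * B_k = 0 for m >= 1,
    starting from B_0 = 1 (this convention gives B_1 = -1/2).
    """
    b = [Fraction(1)]  # B_0 = 1
    for m in range(1, n + 1):
        # Sum the already-known terms B_0 .. B_{m-1} of the recurrence.
        partial = sum(comb(m + 1, k) * b[k] for k in range(m))
        # The coefficient of B_m is C(m+1, m) = m + 1, so solve for B_m.
        b.append(-partial / (m + 1))
    return b


for i, value in enumerate(bernoulli_numbers(8)):
    print(f"B_{i} = {value}")
```

With this convention the odd-indexed numbers after B_1 are all zero, and B_8 comes out as -1/30. The value Lovelace's program targeted, which she labeled B7, is generally identified with B_8 in today's indexing.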

The Electric Revolution

The late 19th and early 20th centuries brought electromechanical calculating machines, a significant leap forward in computing power. Electric motors and electrical circuits allowed machines to work faster and to handle increasingly complex tasks.

One notable example was Herman Hollerith's tabulating machine, developed for the 1890 U.S. census. Hollerith's machines read punched cards electrically to compile and analyze data, producing the initial population count in about six weeks and finishing the full tabulation years faster than the 1880 census, which had taken roughly eight years. This success led to the founding of the Tabulating Machine Company, which through later mergers became IBM, a titan of the computer industry.

Beginning in the late 1920s, Vannevar Bush built the Differential Analyzer at MIT, completing it in 1931. This analog computer used mechanical wheel-and-disc integrators to solve differential equations, demonstrating the potential for machines to tackle complex mathematical problems beyond simple arithmetic.

The Birth of Electronic Computing

World War II served as a catalyst for rapid advancements in computing technology, driven by the urgent need for faster calculation methods in areas such as ballistics and code-breaking. This period saw the emergence of the first true electronic computers.

By the end of 1943, a British team led by engineer Tommy Flowers had built Colossus, often described as the world's first programmable electronic digital computer. Put to work at Bletchley Park to help break the German Lorenz cipher used for high-level military communications, Colossus used vacuum tubes to perform Boolean logic operations on intercepted messages at unprecedented speeds. Its existence remained classified until the 1970s, long obscuring its contribution to the Allied war effort.

Across the Atlantic, another milestone in computing was taking shape. In 1946, the University of Pennsylvania unveiled ENIAC (Electronic Numerical Integrator and Computer), widely regarded as the first general-purpose electronic computer. ENIAC was a behemoth, weighing 30 tons and occupying a large room. It used nearly 18,000 vacuum tubes and could perform around 5,000 additions per second, a staggering improvement over its mechanical predecessors.

The Transistor: A Tiny Revolution

The next paradigm shift in computing came with the invention of the transistor in 1947 by John Bardeen, Walter Brattain, and William Shockley at Bell Labs. This tiny semiconductor device could act as a switch or amplifier, replacing the bulky and unreliable vacuum tubes used in earlier computers.

Transistors allowed computers to become smaller, faster, more reliable, and more energy-efficient, although the transition took most of the 1950s. IBM's first commercial scientific computer, the IBM 701 (1952), still relied on vacuum tubes. In 1954, Bell Labs completed TRADIC (TRAnsistorized DIgital Computer) for the U.S. Air Force, one of the first computers built around transistors, and by the end of the decade IBM was delivering fully transistorized machines such as the IBM 7090.

The Integrated Circuit Era

The pace of innovation accelerated further with the invention of the integrated circuit (IC). Jack Kilby at Texas Instruments demonstrated a working IC in 1958, and Robert Noyce at Fairchild Semiconductor independently developed a more practical silicon-based design in 1959. By fabricating multiple transistors and other components on a single chip, ICs dramatically increased computing power while reducing size and cost.

The IC ushered in a new generation of smaller, more powerful computers. The 1960s saw the rise of "minicomputers" such as the popular DEC PDP-8 (1965), whose first version was built from discrete transistors and whose later models adopted ICs. These machines brought computing power to smaller businesses and research institutions; while still substantial by today's standards, they were a fraction of the size and cost of earlier mainframes.

The Microprocessor Revolution

The next major leap came in 1971 with the first commercially available microprocessor, the Intel 4004. Designed by Intel's Federico Faggin, Ted Hoff, and Stanley Mazor together with Busicom's Masatoshi Shima, this "computer on a chip" contained about 2,300 transistors and could execute up to roughly 60,000 operations per second. The 4004 opened the door to affordable, compact computing devices, setting the stage for the personal computer revolution.

The impact of the microprocessor was soon felt across the computing landscape. Early personal computers like the Altair 8800 (1975) and the Apple II (1977) brought computing into homes and small businesses. The IBM PC, introduced in 1981, set the standard for business computing and spawned a vast ecosystem of compatible hardware and software.

The Graphical User Interface and the Rise of Personal Computing

The 1980s saw a shift towards more user-friendly computing experiences. Apple's Macintosh, introduced in 1984, popularized the graphical user interface (GUI) and the mouse, making computers accessible to a broader audience. Microsoft's Windows operating system, first released in 1985, brought similar capabilities to IBM-compatible PCs.

This era also saw rapid advances in processor speed and memory capacity. Intel's 80386 processor, released in 1985, brought a 32-bit architecture and hardware support for multitasking to PC-compatible machines, paving the way for more sophisticated software applications.

The Internet: Connecting the World

While personal computers were becoming ubiquitous, another revolution was brewing: the rise of computer networking. The foundations were laid in 1969 with ARPANET, one of the first wide-area packet-switching networks and the predecessor of the internet. The TCP/IP protocol suite, developed during the 1970s, became ARPANET's standard on 1 January 1983, allowing different computer networks to interconnect and forming the backbone of the modern internet.

The World Wide Web, invented by Tim Berners-Lee in 1989, made the internet accessible to the masses. The launch of the Mosaic web browser in 1993 marked the beginning of the internet's exponential growth, transforming how we access information, communicate, and conduct business.

Mobile and Cloud Computing: Computing Everywhere

The 21st century has seen computing become even more personal and pervasive. Smartphones, epitomized by the iPhone's launch in 2007, put powerful computers in our pockets, blending communication, computing, and entertainment in unprecedented ways.

Simultaneously, cloud computing has emerged as a paradigm shift in how we access and use computing resources. Services like Amazon Web Services (AWS), launched in 2006, allow individuals and businesses to tap into vast computing power over the internet, democratizing access to advanced computational resources.

The Internet of Things (IoT) extends this trend, connecting everyday objects to the digital world. From smart home devices to industrial sensors, IoT is creating a world where computing is embedded in the fabric of our daily lives.

Artificial Intelligence and Machine Learning: The Next Frontier

While hardware researchers grapple with the physical limits of traditional silicon-based processors, software has opened another frontier. Artificial Intelligence (AI) and Machine Learning (ML) represent some of the most significant developments in computing in recent years.

The field of AI dates back to the 1950s, but recent advances in processing power, data availability, and algorithm design have led to remarkable breakthroughs. Deep learning systems, loosely inspired by networks of neurons in the brain, have matched or exceeded human performance on specific benchmarks in areas ranging from image recognition to natural language processing.

Milestones like IBM's Deep Blue defeating world chess champion Garry Kasparov in 1997, and Google DeepMind's AlphaGo beating Go champion Lee Sedol in 2016, have captured the public imagination. More recently, large language models like GPT-3 have demonstrated impressive natural language abilities, raising both excitement and concerns about the future of AI.

Quantum Computing: Harnessing the Power of Quantum Mechanics

On the hardware front, quantum computing represents a paradigm shift in how we approach computation. Unlike classical computers, whose bits are always either 0 or 1, quantum computers use quantum bits, or qubits, which can exist in a superposition of 0 and 1 thanks to the principles of quantum mechanics. When a qubit is measured, it yields 0 or 1 with probabilities determined by its state.
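Superposition can be illustrated with a tiny state-vector simulation in Python. The sketch assumes the standard textbook model: a qubit's state is a pair of complex amplitudes for the outcomes 0 and 1, the Hadamard gate turns a definite 0 into an equal superposition, and each outcome's probability is the squared magnitude of its amplitude. The names `hadamard` and `probabilities` are hypothetical helpers, and running this on a classical computer only illustrates the mathematics; it provides no quantum speedup.

```python
import math

# A qubit's state: complex amplitudes (alpha, beta) for the outcomes 0 and 1,
# normalized so that |alpha|**2 + |beta|**2 == 1.
State = tuple[complex, complex]


def hadamard(state: State) -> State:
    """Apply the Hadamard gate: |0> becomes an equal superposition of |0> and |1>."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))


def probabilities(state: State) -> tuple[float, float]:
    """Born rule: each outcome's probability is the squared magnitude of its amplitude."""
    alpha, beta = state
    return (abs(alpha) ** 2, abs(beta) ** 2)


zero: State = (1 + 0j, 0j)  # a definite 0, like a classical bit
plus = hadamard(zero)       # superposition: 0 and 1 are each about 50% likely
back = hadamard(plus)       # a second Hadamard interferes back to a definite 0

print(probabilities(zero))
print(probabilities(plus))
print(probabilities(back))
```

The last line shows interference, something a classical coin flip cannot do: applying the same gate twice returns the qubit to a definite 0. Simulating n qubits in this style requires 2^n amplitudes, which is why classical simulation quickly becomes impractical as qubit counts grow.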

While still in its infancy, quantum computing has the potential to solve certain types of problems, such as factoring large numbers, far faster than the best known classical methods. This could have profound implications for fields like cryptography, drug discovery, and complex system simulation.

Companies like IBM, Google, and D-Wave are at the forefront of quantum computing research. In 2019, Google claimed to have achieved "quantum supremacy" when its Sycamore processor completed, in about 200 seconds, a sampling task that Google estimated would take a state-of-the-art classical supercomputer around 10,000 years, an estimate IBM disputed.

The Societal Impact of Computing

The history of computing is not just a tale of technological advancement; it's a story of how technology has reshaped human society. Computers have transformed nearly every aspect of our lives:

- Communication: Email, instant messaging, and social media have transformed how we connect with one another.

- Commerce: E-commerce and digital payments have created new business models and changed consumer behavior.

- Education: Online learning platforms and educational software have democratized access to knowledge.

- Entertainment: Video games, streaming media, and digital art have created new forms of creative expression.

- Work: Automation has changed the nature of work, eliminating some jobs while creating entirely new career paths.

- Science and Medicine: Computers have accelerated scientific discovery and improved medical diagnosis and treatment.

At the same time, the digital revolution has brought new challenges. Issues of privacy, data security, and the digital divide have become pressing concerns in our increasingly connected world.

Looking to the Future

As we stand on the cusp of new breakthroughs in quantum computing, artificial intelligence, and beyond, it's clear that the story of computing is far from over. Emerging technologies like neuromorphic computing (which mimics the structure of the human brain) and optical computing (using light instead of electricity to process information) promise to push the boundaries of what's possible.

The history of computing reflects human ingenuity and our persistent quest for knowledge and progress. From the humble abacus to the quantum computers of tomorrow, each innovation has built upon the last, creating machines of ever-increasing power and versatility.

As we look to the future, the next chapter in the remarkable journey of computing seems likely to be just as revolutionary as the last, continuing to transform our world in ways we can scarcely imagine.