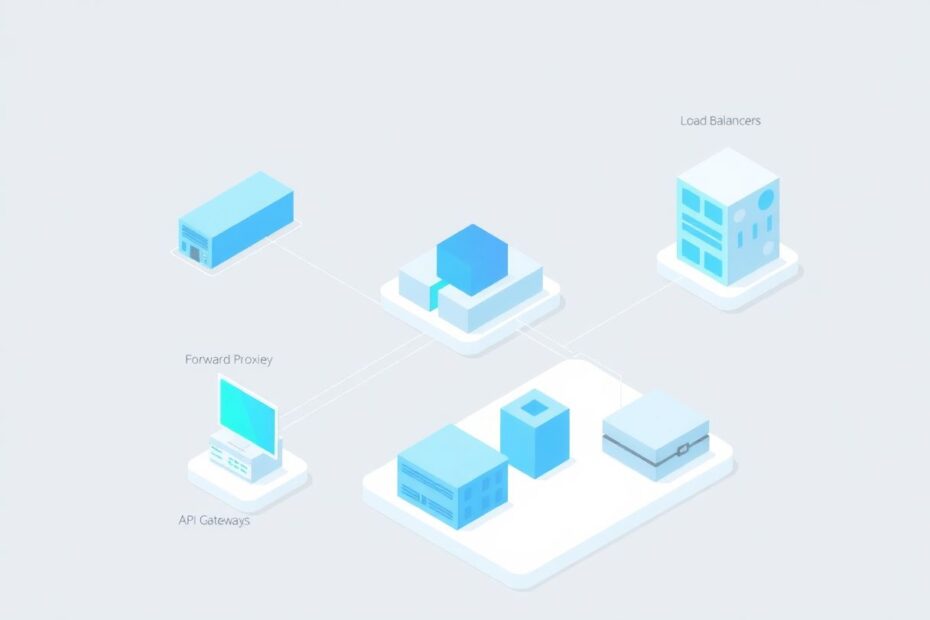

Anyone who builds or maintains modern web applications needs a working understanding of network system design. This guide covers four components that underpin efficient, scalable network architectures: load balancers, reverse proxies, forward proxies, and API gateways. By the end of this article, you'll understand what each one does and how they work together in systems built to handle the demands of contemporary web applications.

The Cornerstone of Modern Web Architecture

Before looking at each component, it helps to understand why they matter in modern web architecture. As web applications grow in complexity and user base, they face increasing traffic loads, the need for high availability and fault tolerance, security concerns, and pressure to stay fast as they scale. Load balancers, reverse proxies, forward proxies, and API gateways are among the most widely used and versatile tools for addressing these challenges.

Load Balancers: The Maestros of Traffic Distribution

Unveiling the Power of Load Balancers

A load balancer is a device or software application that distributes incoming network traffic across multiple servers. Picture a traffic controller at a busy intersection, directing vehicles (requests) onto different roads (servers) so that no single road becomes congested.

The Inner Workings of Load Balancers

When a client sends a request to a web application, the load balancer intercepts this request and forwards it to one of several backend servers. This process utilizes various algorithms to determine which server should handle each request, with the ultimate goal of optimizing resource utilization and minimizing response times.

Exploring Load Balancer Types

Load balancers come in two primary flavors:

Hardware Load Balancers: These are physical devices optimized for high-speed processing, often used in enterprise environments where performance is critical.

Software Load Balancers: These flexible solutions can be deployed on virtual machines or containers, offering scalability and cost-effectiveness for many organizations.

The Art of Load Balancing Algorithms

Load balancers employ different algorithms to distribute traffic effectively:

Round Robin: This simple method hands requests to each server in the pool in turn. Every server receives roughly the same number of requests, although not necessarily the same amount of work, since some requests are more expensive than others.

Least Connections: This algorithm sends new requests to the server with the fewest active connections, helping to prevent any single server from becoming overwhelmed.

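IP Hash: This method hashes the client's IP address to choose a server, so a given client keeps reaching the same server for as long as the server pool is unchanged. That is useful when session state lives on the server. Note that many clients sharing one IP address (for example, behind a corporate NAT) will all land on the same server.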

Weighted Round Robin: A variation on Round Robin that assigns each server a weight based on its capacity, so more powerful servers receive a proportionally larger share of the traffic.
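The four algorithms above can be sketched in a few lines each. This is a minimal illustration rather than how any particular load balancer implements them: the class names, the `pick` and `release` methods, and the server names are all assumptions made for the example.

```python
import hashlib
import itertools


class RoundRobin:
    """Hand out servers in a fixed rotating order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(list(servers))

    def pick(self, client_ip=None):
        return next(self._cycle)


class WeightedRoundRobin:
    """Round robin where a server with weight w appears w times per rotation."""

    def __init__(self, weights):
        # weights: dict mapping server name -> positive integer weight
        expanded = [server for server, w in weights.items() for _ in range(w)]
        self._cycle = itertools.cycle(expanded)

    def pick(self, client_ip=None):
        return next(self._cycle)


class LeastConnections:
    """Send each new request to the server with the fewest active connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def pick(self, client_ip=None):
        server = min(self.active, key=self.active.get)
        self.active[server] += 1  # the new request opens a connection
        return server

    def release(self, server):
        self.active[server] -= 1  # call when the request finishes


class IPHash:
    """Map a client IP to a server deterministically via a hash."""

    def __init__(self, servers):
        self._servers = list(servers)

    def pick(self, client_ip):
        digest = hashlib.sha256(client_ip.encode()).digest()
        index = int.from_bytes(digest[:8], "big") % len(self._servers)
        return self._servers[index]
```

The weighted variant here simply repeats each server in the rotation, which sends bursts to the heavier servers; production balancers typically interleave more smoothly. The IP hash uses a plain modulo, so adding or removing a server remaps most clients.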

The Myriad Benefits of Load Balancers

Implementing load balancers offers numerous advantages:

- Improved application availability by distributing traffic across multiple servers

- Enhanced scalability, allowing for easy addition or removal of servers without downtime

- Better performance under high traffic conditions through efficient resource utilization

- Simplified maintenance, as servers can be taken offline for updates without impacting the overall system

Real-World Application: E-commerce Platform During a Major Sale

Consider a popular e-commerce platform during a highly anticipated sale event. Without a load balancer, a sudden surge in traffic could overwhelm a single server, leading to slow response times or even system crashes. With a load balancer in place, incoming requests are intelligently distributed across multiple servers, ensuring the platform remains responsive and available to all users, even during peak traffic periods.

Reverse Proxies: The Unsung Heroes of Backend Protection

Decoding the Reverse Proxy

A reverse proxy is a server that sits between client devices and web servers, forwarding each client request to the appropriate backend server. Unlike a forward proxy, which acts on behalf of clients, a reverse proxy acts on behalf of the web servers behind it.

The Mechanics of Reverse Proxies

When a client sends a request to a web application, the reverse proxy intercepts this request, forwards it to the appropriate backend server, receives the response, and sends it back to the client. To the client, the reverse proxy appears as the origin server, providing a layer of abstraction and security for the backend infrastructure.

Key Features that Make Reverse Proxies Indispensable

Load Balancing: Many reverse proxies can also function as load balancers, distributing traffic across multiple backend servers.

Caching: By storing frequently requested content, reverse proxies can significantly reduce server load and improve response times.

SSL Termination: Handling HTTPS encryption/decryption at the proxy level offloads this computationally intensive task from backend servers.

Compression: Compressing server responses reduces bandwidth usage and improves overall system performance.

Security: Because clients never connect to backend servers directly, a reverse proxy can filter or rate-limit malicious traffic before it reaches them, which helps mitigate attacks such as DDoS attempts.
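To make the caching feature concrete, here is a minimal sketch of a reverse proxy that keeps responses in memory for a fixed time-to-live. The `CachingReverseProxy` class, its `handle` method, and the `backend` callable are hypothetical names for illustration; real proxies also honor HTTP cache headers, which this sketch ignores.

```python
import time


class CachingReverseProxy:
    """Serve repeated requests from memory instead of calling the backend."""

    def __init__(self, backend, ttl_seconds=30.0, clock=time.monotonic):
        self._backend = backend   # assumed callable: path -> response body
        self._ttl = ttl_seconds
        self._clock = clock       # injectable so the sketch is easy to test
        self._cache = {}          # path -> (expires_at, body)

    def handle(self, path):
        now = self._clock()
        entry = self._cache.get(path)
        if entry is not None and entry[0] > now:
            return entry[1]       # cache hit: the backend is not contacted
        body = self._backend(path)  # cache miss or expired entry
        self._cache[path] = (now + self._ttl, body)
        return body
```

With a TTL of 30 seconds, an article requested thousands of times during a traffic spike reaches the backend about once every 30 seconds; the trade-off is that readers may see content that is up to 30 seconds stale.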

The Multifaceted Benefits of Reverse Proxies

Implementing reverse proxies offers several advantages:

- Enhanced security by hiding backend server details from potential attackers

- Improved performance through intelligent caching and compression mechanisms

- Simplified SSL management, centralizing certificate handling and reducing the burden on backend servers

- Ability to serve static content directly, reducing the load on application servers

Real-World Scenario: News Website During Breaking Events

Imagine a popular news website that experiences significant traffic spikes during major events. A reverse proxy can cache frequently accessed articles, serving them directly to users without hitting the backend servers. This approach not only improves response times but also reduces the load on the application servers, allowing them to focus on generating new content and handling dynamic requests. During breaking news situations, this caching mechanism can be the difference between a responsive, informative platform and a sluggish, overloaded system.

Forward Proxies: The Client's Trusted Intermediaries

Unraveling the Forward Proxy

A forward proxy, often simply referred to as a proxy, is a server that sits between client devices and the internet. It acts on behalf of clients, forwarding their requests to web servers and returning the responses, serving as a valuable tool for privacy, security, and access control.

The Inner Workings of Forward Proxies

When a client wants to access a web resource, it sends the request to the forward proxy instead of directly to the web server. The proxy then makes the request on behalf of the client, receives the response, and forwards it back to the client. This process allows for various benefits and control mechanisms to be implemented.

Essential Features of Forward Proxies

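Anonymity: The web server sees the proxy's IP address rather than the client's, which enhances user privacy. This holds only if the proxy does not pass the client address along, for example in an X-Forwarded-For header.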

Access Control: Organizations can use forward proxies to restrict access to certain websites or content, enforcing acceptable use policies.

Caching: Storing frequently accessed content can improve performance and reduce bandwidth consumption.

Logging: Recording client activities enables monitoring for security, compliance, and analytics purposes.
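The access control, anonymity, and logging features can be sketched together. Everything named here is an assumption for illustration: the `ForwardProxy` class, the `fetch` callable standing in for the real outbound request, and the proxy address, which comes from a range reserved for documentation.

```python
from urllib.parse import urlsplit


class ForwardProxy:
    """Apply an access policy, hide the client IP, and log every request."""

    def __init__(self, fetch, blocked_hosts, proxy_ip="203.0.113.10"):
        self._fetch = fetch            # assumed callable: (url, source_ip) -> body
        self._blocked = set(blocked_hosts)
        self._proxy_ip = proxy_ip      # placeholder documentation-range address
        self.log = []                  # entries of (client_ip, url, outcome)

    def request(self, client_ip, url):
        host = urlsplit(url).hostname
        if host in self._blocked:
            self.log.append((client_ip, url, "blocked"))
            return 403, "Blocked by policy"
        # The outbound request carries the proxy's address, not the client's.
        body = self._fetch(url, self._proxy_ip)
        self.log.append((client_ip, url, "allowed"))
        return 200, body
```

The client address appears only in the proxy's own log, which is what makes centralized monitoring possible while the destination server sees just the proxy.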

The Compelling Benefits of Forward Proxies

Implementing forward proxies offers several advantages:

- Enhanced privacy and security for clients by masking their true IP addresses

- Ability to bypass geo-restrictions, allowing access to content that might otherwise be unavailable

- Improved performance through intelligent caching mechanisms

- Centralized control over internet access within an organization, facilitating policy enforcement and monitoring

Real-World Application: Corporate Internet Usage Management

In a corporate environment, a forward proxy can be an invaluable tool for managing and monitoring employee internet usage. It can be configured to block access to non-work-related websites, cache frequently accessed resources to save bandwidth, and provide detailed logs of internet activity for security and compliance purposes. This level of control not only helps maintain productivity but also protects the organization from potential security threats and legal liabilities associated with inappropriate internet use.

API Gateways: The Conductors of Microservices Symphony

Demystifying the API Gateway

An API gateway is a server that acts as the front end for one or more APIs: it receives API requests, enforces throttling and security policies, passes each request to the appropriate back-end service, and returns the response to the requester. In a microservices architecture, it plays a crucial role in managing the complexity of service interactions.

The Orchestration of API Gateways

When a client makes a request to an API, the gateway intercepts this request. It can then perform various actions such as authentication, rate limiting, or request transformation before forwarding the request to the appropriate microservice. Once the microservice responds, the gateway can again transform the response before sending it back to the client, ensuring a seamless and secure interaction.
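The request flow just described (authenticate, route, then shape the response) might look like the following sketch. The `ApiGateway` class, the token check, and the prefix-based routing table are simplifying assumptions; real gateways validate signed credentials and support much richer routing rules.

```python
class ApiGateway:
    """Authenticate a request, route it by path prefix, and wrap the response."""

    def __init__(self, routes, valid_tokens):
        self._routes = routes                  # path prefix -> service callable
        self._valid_tokens = set(valid_tokens)  # stand-in for real auth

    def handle(self, path, token):
        # Step 1: reject unauthenticated callers before any service is touched.
        if token not in self._valid_tokens:
            return {"status": 401, "body": "unauthorized"}
        # Step 2: route to the service with the longest matching prefix.
        for prefix in sorted(self._routes, key=len, reverse=True):
            if path.startswith(prefix):
                result = self._routes[prefix](path)
                # Step 3: transform the raw result into a uniform envelope.
                return {"status": 200, "body": result}
        return {"status": 404, "body": "no such route"}
```

Because the client only ever sees the envelope returned by `handle`, the services behind each prefix can be replaced or split without changing the client.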

Pivotal Features of API Gateways

Request Routing: API gateways excel at directing requests to the appropriate microservice, abstracting the complexity of the backend architecture from clients.

Authentication and Authorization: Centralizing these security measures at the gateway level ensures consistent and robust access control across all services.

Rate Limiting: By controlling the number of requests a client can make, API gateways protect backend services from overload and potential abuse.

Request/Response Transformation: Modifying requests or responses as needed allows for version management and data format standardization.

Monitoring and Analytics: Comprehensive tracking of API usage and performance provides valuable insights for optimization and troubleshooting.
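Rate limiting is the feature in this list that benefits most from a worked example. The article does not say which algorithm a gateway uses; the sketch below uses a token bucket, one common choice, and the `TokenBucket` class and its parameters are names invented for illustration.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at a steady rate."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self._capacity = capacity
        self._rate = refill_per_second
        self._clock = clock            # injectable so the sketch is easy to test
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self):
        now = self._clock()
        # Add the tokens earned since the last call, capped at capacity.
        elapsed = now - self._last
        self._tokens = min(self._capacity, self._tokens + elapsed * self._rate)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1          # spend one token on this request
            return True
        return False                   # caller should reject, e.g. with HTTP 429
```

To limit each client separately, a gateway could keep one bucket per client identifier, for example in a dictionary keyed by API key.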

The Transformative Benefits of API Gateways

Implementing API gateways offers numerous advantages:

- Simplified client interaction with microservices, presenting a unified API interface

- Centralized authentication and security management, reducing the burden on individual services

- Ability to handle protocol translations (e.g., REST to gRPC), enabling flexibility in backend technology choices

- Enhanced observability of API usage and performance, facilitating data-driven decision making

Real-World Scenario: Mobile Banking Application

Consider a mobile banking application that needs to access various backend services for account information, transactions, and customer support. An API gateway can provide a single entry point for the mobile app, handling authentication, routing requests to the appropriate microservices, and aggregating responses. This approach simplifies the mobile app's architecture and allows backend services to evolve independently without affecting the client. The API gateway can also implement rate limiting to prevent abuse, translate between different API versions to support older app versions, and provide detailed analytics on API usage patterns.

Crafting a Comprehensive Network Architecture

Now that we've explored each component individually, let's examine how they can work together in a modern web application architecture:

    [Clients] -> [CDN] -> [Load Balancer] -> [API Gateway] -> [Microservices]
                                 |
                          [Reverse Proxy]
                                 |
                        [Application Servers]

In this sophisticated architecture:

- Clients send requests to the application.

- A Content Delivery Network (CDN) serves static content from its cache and forwards dynamic requests to the application.

- The Load Balancer distributes incoming traffic across multiple instances for optimal performance.

- The Reverse Proxy handles SSL termination and caching, offloading these tasks from backend servers.

- The API Gateway manages authentication, rate limiting, and request routing to microservices.

- Finally, the requests reach the appropriate Microservices, which process the requests and generate responses.

This multi-layered setup provides multiple tiers of scalability, security, and performance optimization, creating a robust foundation for modern web applications.
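One way to picture the layering is as a chain of handlers, each wrapping the next. The sketch below only records the order in which a request passes through the layers listed above; `make_layer`, `microservice`, and the trace list are hypothetical names, and each real layer would do its own work (caching, balancing, authentication) before passing the request on.

```python
def make_layer(name, next_handler):
    """Build a handler that notes its own name, then delegates inward."""

    def handler(request, trace):
        trace.append(name)
        return next_handler(request, trace)

    return handler


def microservice(request, trace):
    """Innermost handler: the service that produces the response."""
    trace.append("Microservice")
    return f"response to {request}"


# Wrap from the inside out so the CDN ends up as the outermost layer.
pipeline = microservice
for layer_name in reversed(["CDN", "Load Balancer", "Reverse Proxy", "API Gateway"]):
    pipeline = make_layer(layer_name, pipeline)
```

Calling `pipeline("GET /accounts", trace)` with an empty list fills `trace` with the layer names from the outermost inward, ending at the microservice.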

Conclusion: Empowering Your Network Architecture

Mastering the intricacies of load balancers, reverse proxies, forward proxies, and API gateways is crucial for designing resilient and scalable network systems. Each component plays a vital role in handling traffic, enhancing security, and optimizing performance, working in concert to create a harmonious and efficient web architecture.

As you embark on designing and building your own systems, consider how these powerful tools can be applied to solve specific challenges unique to your application. Remember that the most effective architecture is one that not only meets your current requirements but also provides flexibility for future growth and adaptation.

With these concepts and their practical applications in hand, you're well-equipped to create robust, high-performance network architectures capable of handling the demands of modern web applications. Keep exploring, experimenting, and learning: web technologies keep evolving, but these foundational components will continue to help you build scalable, secure, and efficient systems.