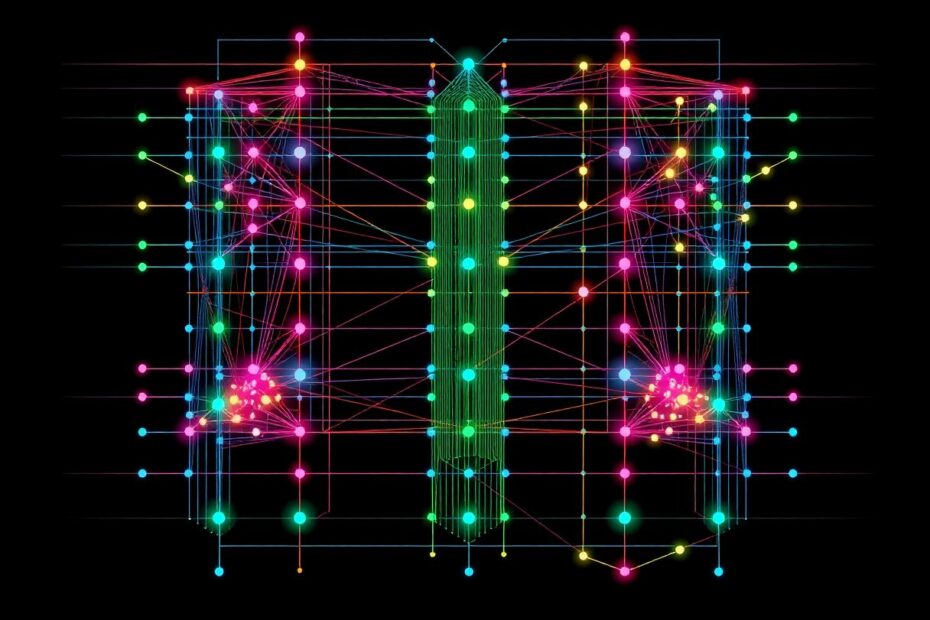

Neural networks are the cornerstone of modern machine learning. Loosely inspired by the human brain, these models have changed how we approach complex problems in vision, language, speech, and many other domains. Their fundamental components are layers, each of which applies a specific transformation to its input. This guide walks through the main types of neural network layers, covering what each one computes, where it is typically used, and how to create it in TensorFlow and PyTorch.

The Foundation: Dense Layers

Dense layers, also known as fully connected layers, form the backbone of many neural network architectures. These layers serve as the workhorses of neural networks, connecting every neuron in one layer to every neuron in the subsequent layer. This comprehensive connectivity allows for complex pattern recognition and decision-making processes that are essential for a wide range of AI applications.

The Inner Workings of Dense Layers

At their core, dense layers perform a linear transformation on their input, followed by the application of an activation function. This process can be mathematically represented as:

y = f(Wx + b)

Where:

- x is the input vector

- W is the weight matrix

- b is the bias vector

- f is the activation function

This seemingly simple equation encapsulates the power of dense layers to learn and represent complex relationships within data. The weight matrix W and bias vector b are learned during the training process, allowing the network to adapt to the specific patterns and features present in the training data.
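
To make the equation concrete, here is a minimal framework-free sketch of a single dense layer's forward pass. The weights, bias, and input are hypothetical toy values picked for illustration (nothing here is learned), and ReLU is assumed as the activation f.

```python
def relu(values):
    """Element-wise ReLU: negative entries become 0."""
    return [max(0.0, v) for v in values]


def dense_forward(x, W, b, activation=relu):
    """Compute f(Wx + b) for one input vector x.

    W is a list of rows, one row of weights per output neuron.
    """
    pre_activation = [
        sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
        for row, b_i in zip(W, b)
    ]
    return activation(pre_activation)


# Hypothetical toy values: 3 inputs feeding 2 output neurons.
x = [1.0, 2.0, 3.0]
W = [[0.5, -1.0, 0.0],
     [1.0, 1.0, 1.0]]
b = [1.0, -0.5]

y = dense_forward(x, W, b)
print(y)  # [0.0, 5.5]
```

The first neuron's pre-activation is -0.5, so ReLU clips it to 0; the second neuron's is 5.5 and passes through unchanged.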

Implementing Dense Layers in Popular Frameworks

For those looking to implement dense layers in their own projects, popular deep learning frameworks like TensorFlow and PyTorch offer straightforward ways to create these layers:

In TensorFlow:

```python
import tensorflow as tf

dense_layer_tf = tf.keras.layers.Dense(units=64, activation='relu')
```

In PyTorch:

```python
import torch.nn as nn

dense_layer_pytorch = nn.Linear(in_features=32, out_features=64)
activation = nn.ReLU()
```

These implementations showcase the simplicity with which developers can incorporate dense layers into their neural network architectures. The flexibility of dense layers makes them suitable for a wide range of applications, from simple classification tasks to complex multi-layer perceptrons that can tackle intricate problem domains.
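
One practical consequence of full connectivity is that the parameter count grows with the product of the input and output sizes. The sketch below works through the arithmetic for the PyTorch snippet above (32 inputs, 64 outputs); the helper name `dense_param_count` is made up for this illustration and is not part of either framework.

```python
def dense_param_count(in_features, out_features, use_bias=True):
    """Weights form an (out_features x in_features) matrix, plus one bias per output."""
    weights = in_features * out_features
    biases = out_features if use_bias else 0
    return weights + biases


params = dense_param_count(in_features=32, out_features=64)
print(params)  # 2112 = 32 * 64 weights + 64 biases
```

Note that the Keras layer only specifies `units`; it infers the input size the first time it is called, whereas `nn.Linear` needs `in_features` up front.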

Convolutional Layers: Revolutionizing Image Processing

While dense layers excel in many areas, the field of computer vision demanded a more specialized approach. Enter convolutional layers, which have transformed the way we process and analyze visual data. These layers apply filters (also known as kernels) to input data, enabling the network to detect features such as edges, textures, and patterns with remarkable efficiency.

The Magic Behind Convolutions

The core operation in convolutional layers is the convolution itself, which can be expressed mathematically as:

O(i,j) = Σ_m Σ_n I(i+m, j+n) * K(m,n)

Where:

- O is the output feature map

- I is the input image or feature map

- K is the kernel or filter

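Here the sums run over the kernel's rows (m) and columns (n). Strictly speaking, this formula is cross-correlation, since the kernel is not flipped, but it is the operation that deep learning frameworks implement under the name convolution. It allows the network to learn local features in a hierarchical manner, building up from simple edge detectors in early layers to complex feature recognizers in deeper layers. This hierarchical learning is key to the success of convolutional neural networks (CNNs) in tasks such as image classification, object detection, and image segmentation.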
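
The formula can be traced by hand with a few nested loops. The following is a minimal sketch for a single-channel input, assuming stride 1 and no padding; the image and the edge-detecting kernel are hypothetical toy values, whereas a real layer would learn its kernels during training.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (stride 1, no padding).

    Implements O(i,j) = sum over m, n of I(i+m, j+n) * K(m,n).
    """
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for m in range(k_h):
                for n in range(k_w):
                    total += image[i + m][j + n] * kernel[m][n]
            row.append(total)
        output.append(row)
    return output


# Toy 4x4 image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked kernel that responds where intensity increases left to right.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d_valid(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map is nonzero only in the column where the kernel straddles the edge, which is the sense in which a filter "detects" a feature.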

Bringing Convolutional Layers to Life

Implementing convolutional layers in modern deep learning frameworks is straightforward:

In TensorFlow:

```python
conv_layer_tf = tf.keras.layers.Conv2D(filters=32, kernel_size=(3,3), activation='relu')
```

In PyTorch:

```python
conv_layer_pytorch = nn.Conv2d(in_channels=3, out_channels=32, kernel_size=3, padding=1)
```

These implementations allow developers to easily incorporate convolutional layers into their models, enabling the creation of sophisticated CNNs that have achieved state-of-the-art results in numerous computer vision tasks.
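
The two snippets above are not quite equivalent: Keras `Conv2D` defaults to `padding='valid'` (no padding), while the PyTorch example sets `padding=1`. The sketch below works through the standard output-size arithmetic to show the difference, along with the parameter count of the PyTorch layer. The helper names and the 32-pixel input size are assumptions made for this illustration.

```python
def conv_output_size(input_size, kernel_size, padding=0, stride=1):
    """Spatial output size along one dimension of a convolution."""
    return (input_size + 2 * padding - kernel_size) // stride + 1


def conv2d_param_count(in_channels, out_channels, kernel_size):
    """One k x k filter per (input, output) channel pair, plus one bias per output channel."""
    return in_channels * out_channels * kernel_size * kernel_size + out_channels


# Hypothetical 32-pixel-wide input with a 3x3 kernel.
no_padding = conv_output_size(32, kernel_size=3, padding=0)
with_padding = conv_output_size(32, kernel_size=3, padding=1)
params = conv2d_param_count(in_channels=3, out_channels=32, kernel_size=3)

print(no_padding)    # 30: each spatial dimension shrinks by 2
print(with_padding)  # 32: one pixel of padding preserves the size
print(params)        # 896 = 3 * 32 * 9 weights + 32 biases
```

Compared with the dense layer, the parameter count here does not depend on the image size at all, because the same small kernels are reused at every position.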

Recurrent Layers: Mastering Sequential Data

While dense and convolutional layers excel at processing fixed-size inputs, many real-world problems involve sequential data of varying lengths. Recurrent layers address this challenge by maintaining an internal state that captures information from previous time steps. This makes them ideal for tasks involving time series analysis, natural language processing, and speech recognition.

The Recurrent Neuron: A Memory-Enabled Powerhouse

At the heart of recurrent layers is the recurrent neuron, which updates its hidden state based on the current input and the previous hidden state:

h_t = f(W_x * x_t + W_h * h_(t-1) + b)

Where:

- h_t is the current hidden state

- x_t is the current input

- h_(t-1) is the previous hidden state

- W_x and W_h are weight matrices

- b is the bias vector

- f is the activation function

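This recurrent structure gives the network a form of memory: because the same weights are applied at every time step, it can process sequences of arbitrary length, with each hidden state summarizing what came before. In principle this lets the network capture long-term dependencies, although in practice simple recurrent layers struggle to learn them.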
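
As a rough illustration of the update rule, here is a framework-free sketch that applies the same step to sequences of two different lengths. The weights are hypothetical toy values, tanh is assumed as the activation f, and the initial hidden state is assumed to be all zeros.

```python
import math


def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]


def rnn_step(x_t, h_prev, W_x, W_h, b):
    """One recurrent update: h_t = tanh(W_x x_t + W_h h_prev + b)."""
    wx = matvec(W_x, x_t)
    wh = matvec(W_h, h_prev)
    return [math.tanh(a + c + b_i) for a, c, b_i in zip(wx, wh, b)]


def rnn_forward(sequence, W_x, W_h, b):
    """Run the same step over every element, carrying the hidden state along."""
    h = [0.0] * len(b)  # assumed zero initial state
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, W_x, W_h, b)
        states.append(h)
    return states


# Hypothetical toy weights: 1 input feature, 2 hidden units.
W_x = [[1.0], [0.5]]
W_h = [[0.0, 0.5], [0.5, 0.0]]
b = [0.0, 0.0]

short = rnn_forward([[1.0], [0.0]], W_x, W_h, b)
longer = rnn_forward([[1.0], [0.0], [0.0], [1.0]], W_x, W_h, b)
print(len(short), len(longer))  # 2 4
```

The second input in both sequences is zero, yet the second hidden state is not: it is driven entirely by what the layer remembers from the first step.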

Implementing Recurrent Layers in Practice

Creating recurrent layers in modern deep learning frameworks is straightforward:

In TensorFlow:

```python
rnn_layer_tf = tf.keras.layers.SimpleRNN(units=64, activation='tanh')
```

In PyTorch:

```python
rnn_layer_pytorch = nn.RNN(input_size=32, hidden_size=64, num_layers=1)
```

While these simple recurrent layers can be effective for some tasks, more advanced variants like Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU) have been developed to address the challenges of learning long-term dependencies. These advanced recurrent architectures have become the go-to choice for many sequential data processing tasks.

Attention Layers: Focusing on What Matters

In recent years, attention layers have emerged as a powerful tool in neural network design, particularly in natural language processing tasks. These layers allow the network to focus on relevant parts of the input when producing output, leading to improved performance on many tasks.

The Attention Mechanism Unveiled

The core idea behind attention is to compute a weighted sum of input values based on their relevance to a given context. The attention weights are typically computed as:

attention_weights = softmax(Q * K^T / sqrt(d_k))

Where:

- Q is the query matrix

- K is the key matrix

- d_k is the dimension of the keys

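These weights are then multiplied by a value matrix V to produce the output, so each output row is a weighted sum of the values. This mechanism allows the network to dynamically focus on different parts of the input sequence, greatly enhancing its ability to process long sequences and capture complex dependencies.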
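
A small worked example may help here. The sketch below computes the attention weights from the formula and then applies them to a value matrix V to form the weighted sum. Q, K, and V are hypothetical toy values; in a real layer they would come from learned projections of the input.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention on lists of row vectors.

    Returns (weights, output); weights[i][j] is how much query i attends to key j.
    """
    d_k = len(K[0])
    weights = []
    for q in Q:
        scores = [
            sum(q_i * k_i for q_i, k_i in zip(q, k)) / math.sqrt(d_k)
            for k in K
        ]
        weights.append(softmax(scores))
    output = [
        [sum(w * v[c] for w, v in zip(row, V)) for c in range(len(V[0]))]
        for row in weights
    ]
    return weights, output


# One query that matches the first and third keys but not the second.
Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
V = [[10.0, 0.0], [0.0, 10.0], [10.0, 0.0]]

weights, output = attention(Q, K, V)
print(weights[0])
print(output[0])
```

The matching keys receive equal, larger weights than the non-matching one, so the output is pulled toward the values stored at those positions.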

Implementing Attention in Modern Frameworks

Here's a simple implementation of an attention layer:

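In TensorFlow:

```python
# Dot-product attention; call it as attention_layer_tf([query, value]).
# By default the scores are not scaled by 1/sqrt(d_k).
attention_layer_tf = tf.keras.layers.Attention()
```

In PyTorch:

```python
import math

import torch
import torch.nn as nn


class Attention(nn.Module):
    """Single-head self-attention over the last dimension of x."""

    def __init__(self, dim):
        super().__init__()
        self.dim = dim
        # Learned projections that produce queries, keys, and values
        self.query = nn.Linear(dim, dim)
        self.key = nn.Linear(dim, dim)
        self.value = nn.Linear(dim, dim)

    def forward(self, x):
        q = self.query(x)
        k = self.key(x)
        v = self.value(x)
        # Scaled dot-product scores between every pair of positions
        scores = torch.matmul(q, k.transpose(-2, -1)) / math.sqrt(self.dim)
        attention_weights = torch.softmax(scores, dim=-1)
        return torch.matmul(attention_weights, v)
```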

Attention layers have become a crucial component in state-of-the-art models like Transformers, which have achieved remarkable results in various natural language processing tasks, including machine translation, text summarization, and question answering.

Specialized Layers: Enhancing Network Performance

Beyond the fundamental layer types, researchers and practitioners have developed several specialized layers to address specific challenges in neural network training and improve overall performance. These layers often serve as crucial enhancements to the core architecture, enabling more robust and efficient learning.

Batch Normalization: Stabilizing the Learning Process

Batch normalization layers normalize the inputs to each layer, which can help stabilize and accelerate training. The operation can be expressed as:

y = γ * (x - μ) / sqrt(σ^2 + ε) + β

Where:

- μ is the batch mean

- σ^2 is the batch variance

- γ and β are learnable parameters

- ε is a small constant for numerical stability

Batch normalization was originally motivated as a way to reduce internal covariate shift, the change in the distribution of a layer's inputs as earlier layers are updated. Whatever the exact mechanism, it typically permits higher learning rates and faster convergence. Implementing batch normalization is straightforward in modern frameworks:

In TensorFlow:

```python
batch_norm_tf = tf.keras.layers.BatchNormalization()
```

In PyTorch:

```python
batch_norm_pytorch = nn.BatchNorm2d(num_features=64)
```
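
To see what the normalization formula does to actual numbers, here is a framework-free sketch for a single feature across a batch of four examples. It assumes training-mode behavior, where μ and σ^2 are computed from the current batch; at inference time the framework layers use running averages instead, which this sketch does not model. The activations and the γ, β values are hypothetical.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a batch using the batch's own statistics."""
    mean = sum(batch) / len(batch)
    variance = sum((x - mean) ** 2 for x in batch) / len(batch)
    return [gamma * (x - mean) / math.sqrt(variance + eps) + beta for x in batch]


# Hypothetical activations of one feature for a batch of 4 examples.
activations = [2.0, 4.0, 6.0, 8.0]

normalized = batch_norm(activations)
shifted = batch_norm(activations, gamma=2.0, beta=1.0)

print(normalized)  # roughly zero mean and unit variance
print(shifted)     # same shape, rescaled by gamma and shifted by beta
```

With γ = 1 and β = 0 the output has approximately zero mean and unit variance; the learnable γ and β let the network undo or adjust that normalization if it helps.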

Dropout: A Powerful Regularization Technique

Dropout layers randomly set a fraction of input units to 0 during training, which helps prevent overfitting. The dropout operation can be represented as:

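y = x * mask / (1 - p), where mask ~ Bernoulli(1 - p)

Where p is the probability of dropping a unit, which is what the rate and p arguments in TensorFlow and PyTorch specify. Dividing by 1 - p during training keeps the expected activation unchanged, so the layer can simply pass its input through at inference time. This simple yet effective technique has become a staple in many neural network architectures, improving generalization and reducing overfitting. Implementing dropout is straightforward: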

In TensorFlow:

```python
dropout_tf = tf.keras.layers.Dropout(rate=0.5)
```

In PyTorch:

```python
dropout_pytorch = nn.Dropout(p=0.5)
```
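
Both framework layers take the probability of dropping a unit (`rate` in Keras, `p` in PyTorch) and rescale the surviving units during training, a variant often called inverted dropout. The following is a minimal sketch of that behavior in plain Python; the function name, the `drop_rate` parameter, and the seeded generator are choices made for this illustration.

```python
import random


def dropout(values, drop_rate, training=True, rng=random):
    """Inverted dropout: zero each unit with probability drop_rate, rescale the rest."""
    if not training or drop_rate == 0.0:
        # At inference time the layer passes its input through unchanged.
        return list(values)
    keep_prob = 1.0 - drop_rate
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]


rng = random.Random(0)  # seeded only so that repeated runs give the same mask
activations = [1.0] * 10

dropped = dropout(activations, drop_rate=0.5, rng=rng)
at_inference = dropout(activations, drop_rate=0.5, training=False)

print(dropped)       # a mix of 0.0 (dropped) and 2.0 (kept and rescaled)
print(at_inference)  # unchanged input
```

Rescaling the kept units by 1 / (1 - drop_rate) keeps the expected value of each activation the same in training and inference, which is why no correction is needed when the model is deployed.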

LSTM and GRU: Advanced Recurrent Architectures

Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) layers are more sophisticated variants of recurrent layers that can better handle long-term dependencies in sequential data. These architectures introduce gating mechanisms that allow the network to selectively update, forget, or output information from its internal state.
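
The idea of a gate can be shown with a deliberately simplified sketch: a single scalar state and one update gate that blends the previous state with a candidate value. This is not a full LSTM or GRU cell. In the real layers the gate values are computed from the current input and previous state with learned weights, there are several gates, and conventions differ on which side of the blend the gate multiplies. All names and numbers below are hypothetical.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def gated_update(h_prev, candidate, gate_logit):
    """Blend old state and candidate: a gate near 0 keeps h_prev, near 1 overwrites it."""
    z = sigmoid(gate_logit)
    return (1.0 - z) * h_prev + z * candidate


h_prev = 0.8

kept = gated_update(h_prev, candidate=-0.3, gate_logit=-10.0)
overwritten = gated_update(h_prev, candidate=-0.3, gate_logit=10.0)

print(kept)         # very close to 0.8: the old state is preserved
print(overwritten)  # very close to -0.3: the state is replaced
```

Because a nearly closed gate copies the previous state forward almost unchanged, information can survive across many time steps, which is what makes long-term dependencies easier to learn than with a simple recurrent layer.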

Implementing these advanced recurrent layers is straightforward in modern frameworks:

In TensorFlow:

```python
lstm_tf = tf.keras.layers.LSTM(units=64)
gru_tf = tf.keras.layers.GRU(units=64)
```

In PyTorch:

```python
lstm_pytorch = nn.LSTM(input_size=32, hidden_size=64, num_layers=1)
gru_pytorch = nn.GRU(input_size=32, hidden_size=64, num_layers=1)
```

These advanced recurrent architectures have become the go-to choice for many sequence processing tasks, offering improved performance over simple RNNs in capturing long-term dependencies.

The Future of Neural Network Layers

As we look to the future, the field of neural network architecture design continues to evolve at a rapid pace. Researchers are constantly developing new layer types and refining existing ones to address the challenges of modern AI applications. Some emerging trends in layer design include:

- Sparse Attention Mechanisms: Building on the success of attention layers, researchers are exploring more efficient attention mechanisms that can scale to even longer sequences and larger datasets.

- Neural Architecture Search (NAS): Automated methods for discovering optimal layer combinations and architectures are gaining traction, potentially leading to novel layer designs tailored for specific tasks.

- Quantum-Inspired Layers: As quantum computing advances, researchers are exploring ways to incorporate quantum-inspired computations into classical neural networks, potentially leading to new types of quantum-classical hybrid layers.

- Neuromorphic Computing Layers: Inspired by the efficiency of biological neural networks, researchers are developing new layer types that more closely mimic the behavior of neurons in the brain, potentially leading to more energy-efficient AI systems.

Conclusion: Mastering the Building Blocks of AI

Understanding the various types of neural network layers is crucial for designing effective deep learning models. From the foundational dense layers to the specialized attention mechanisms, each layer type offers unique capabilities that can be combined to solve complex problems in artificial intelligence.

As you continue to explore the world of neural networks, remember that the choice of layers and their configuration can significantly impact your model's performance. Experiment with different layer combinations, and don't be afraid to mix and match to create novel architectures tailored to your specific tasks.

By mastering these building blocks, you'll be well-equipped to tackle a wide range of machine learning challenges and contribute to the field of artificial intelligence. The layers explored here underpin most of today's deep learning models and will likely keep shaping the intelligent systems of tomorrow, so keep learning, keep experimenting, and keep pushing the boundaries of what's possible with neural networks.