In the ever-evolving landscape of machine learning and data analysis, radial basis functions (RBFs) stand out as powerful mathematical tools that enable the transformation of complex, non-linear data into more manageable forms. As we delve into the world of RBFs, we'll explore their types, advantages, and real-world applications, shedding light on why these functions have become indispensable in various fields of study and industry.

Understanding Radial Basis Functions

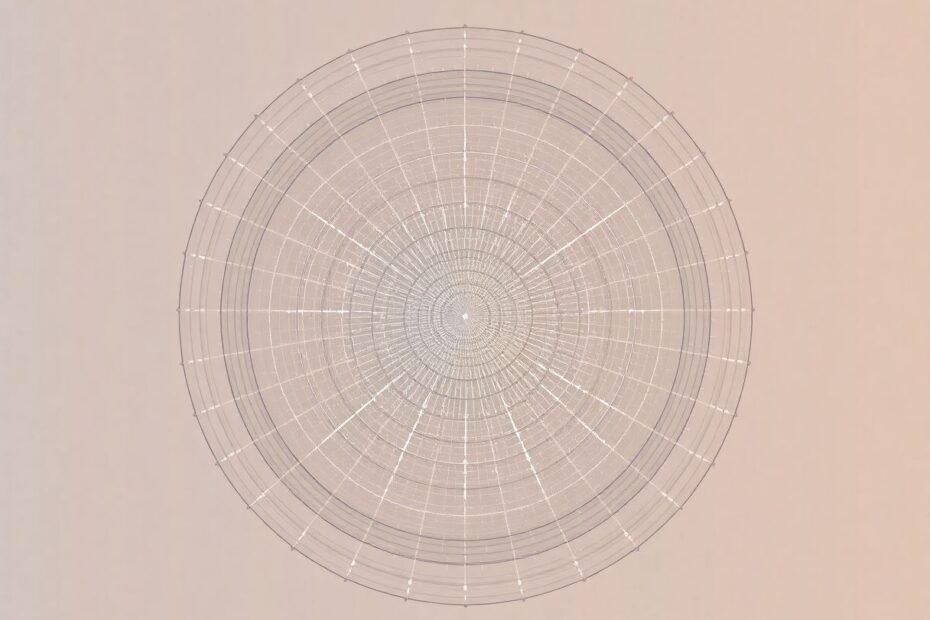

At their core, radial basis functions are a class of mathematical functions whose value depends only on the distance between an input point and a fixed reference point, or center, in multi-dimensional space. The term "radial" reflects this radial symmetry: every input at the same distance from the center produces the same output. For some RBFs, such as the Gaussian, the output also decreases as one moves away from the center, much like the ripples formed when a pebble is thrown into a calm pond fade with distance from the point of impact. Others, such as the multiquadric, grow with distance instead.

Mathematically, an RBF centered at c is written as f(x) = φ(||x - c||), where x is the input vector, c is the center vector, || || denotes the Euclidean norm, and φ is a scalar function of the distance r = ||x - c||. Combining many such functions, each with its own center and weight, lets RBF models capture non-linear relationships in data that elude linear models. This makes them valuable in a range of machine learning tasks.
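The definition above can be sketched in a few lines of NumPy. This is a minimal illustration that assumes φ is any scalar function of the distance. The helper name `radial_basis` is hypothetical and not part of any library. Because the output depends only on ||x - c||, two inputs at the same distance from the center receive the same value, whatever their direction.

```python
import numpy as np

def radial_basis(phi, x, c):
    """Evaluate phi(||x - c||) for one input x and one center c (hypothetical helper)."""
    r = np.linalg.norm(np.asarray(x, dtype=float) - np.asarray(c, dtype=float))
    return phi(r)

# Example profile: a Gaussian with sigma = 1, i.e. exp(-r^2 / 2)
gaussian = lambda r: np.exp(-r**2 / 2.0)

c = np.array([1.0, 1.0])
a = radial_basis(gaussian, [4.0, 1.0], c)   # 3 units to the right of c
b = radial_basis(gaussian, [1.0, -2.0], c)  # 3 units below c
print(a, b)  # equal values: only the distance to c matters
```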

Types of Radial Basis Functions

Several types of radial basis functions exist, each with unique properties suited for different applications. Let's explore some of the most common and widely used RBFs:

Gaussian RBF

The Gaussian RBF is arguably the most popular and well-known type. It is defined as φ(r) = exp(-r^2 / (2σ^2)), where r = ||x - c|| is the distance from the center and σ is a parameter controlling the function's width. The result is a smooth, bell-shaped curve. This function is particularly effective for problems involving continuous, smooth data distributions. Many machine learning practitioners treat it as the go-to choice because of its versatility and well-understood properties.
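To see how σ controls the width, the sketch below evaluates the Gaussian RBF defined above at a few distances for a narrow (σ = 0.5) and a wide (σ = 2.0) setting. These σ values are illustrative choices, not recommendations.

```python
import numpy as np

def gaussian_rbf(r, sigma):
    """Gaussian RBF: exp(-r^2 / (2 sigma^2)), where r = ||x - c||."""
    return np.exp(-np.asarray(r, dtype=float) ** 2 / (2.0 * sigma ** 2))

r = np.array([0.0, 0.5, 1.0, 2.0, 3.0])
narrow = gaussian_rbf(r, sigma=0.5)  # falls off quickly: a very local unit
wide = gaussian_rbf(r, sigma=2.0)    # falls off slowly: a broader unit
print(np.round(narrow, 3))
print(np.round(wide, 3))
```

Both curves equal 1 at the center. At r = σ, both equal exp(-1/2) ≈ 0.607, so σ can be read as the distance at which a unit's response has dropped to about 61% of its peak.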

Multiquadric RBF

The Multiquadric RBF, defined as φ(r) = sqrt(r^2 + ε^2), where ε is a shape parameter, increases monotonically with distance from the center. This characteristic makes it especially useful for interpolation problems where capturing global trends in data is crucial. Engineers and scientists often employ this function when dealing with scattered data points in physical simulations or geographical modeling.
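A common use of the multiquadric is exact interpolation of scattered data. The sketch below places one RBF at each sample site and solves the linear system Φw = f, where Φ[i, j] = φ(||x_i - x_j||), so that the interpolant passes through every data value. For distinct points this multiquadric matrix is known to be nonsingular. The sample sites, test signal, and ε = 0.5 are illustrative assumptions, and the helper names are hypothetical.

```python
import numpy as np

def multiquadric(r, eps):
    """Multiquadric RBF: sqrt(r^2 + eps^2); grows with distance from the center."""
    return np.sqrt(np.asarray(r, dtype=float) ** 2 + eps ** 2)

def fit_rbf_interpolant(points, values, phi):
    """Solve Phi w = f with Phi[i, j] = phi(||x_i - x_j||), so s(x_i) = f_i."""
    r = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    return np.linalg.solve(phi(r), values)

def evaluate_rbf_interpolant(weights, centers, queries, phi):
    """s(x) = sum_i w_i * phi(||x - x_i||), evaluated at each query point."""
    r = np.linalg.norm(queries[:, None, :] - centers[None, :, :], axis=-1)
    return phi(r) @ weights

# Scattered 2D sample sites with a smooth test signal (illustrative data)
rng = np.random.default_rng(0)
sites = rng.uniform(0.0, 1.0, size=(30, 2))
heights = np.sin(3 * sites[:, 0]) + np.cos(2 * sites[:, 1])

phi = lambda r: multiquadric(r, eps=0.5)
w = fit_rbf_interpolant(sites, heights, phi)

# The interpolant reproduces the data at the sample sites...
print(np.max(np.abs(evaluate_rbf_interpolant(w, sites, sites, phi) - heights)))
# ...and can be evaluated anywhere in between
print(evaluate_rbf_interpolant(w, sites, np.array([[0.5, 0.5]]), phi))
```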

Inverse Multiquadric RBF

As the name suggests, the Inverse Multiquadric RBF is the reciprocal of the multiquadric, expressed as φ(r) = 1 / sqrt(r^2 + ε^2). This function decreases monotonically with distance, making it ideal for localized interpolation and smoothing tasks. It's particularly useful in scenarios where local features of the data matter more than global trends, such as in certain signal processing applications or local weather prediction models.
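A short sketch makes the shape of the inverse multiquadric concrete. It peaks at 1/ε at the center, decreases strictly with distance, and decays roughly like 1/r far away. That decay is much slower than a Gaussian's, which matters when deciding how "local" a unit really is. The value ε = 0.5 is an illustrative choice.

```python
import numpy as np

def inverse_multiquadric(r, eps):
    """Inverse multiquadric RBF: 1 / sqrt(r^2 + eps^2), the reciprocal of the multiquadric."""
    return 1.0 / np.sqrt(np.asarray(r, dtype=float) ** 2 + eps ** 2)

eps = 0.5
r = np.linspace(0.0, 5.0, 11)
values = inverse_multiquadric(r, eps)

print(values[0])                    # peak at the center: 1 / eps = 2.0
print(np.all(np.diff(values) < 0))  # True: strictly decreasing with distance
# Far from the center, r * phi(r) approaches 1, i.e. phi(r) behaves like 1 / r
print(values[-1] * r[-1])
```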

Thin Plate Spline RBF

The Thin Plate Spline RBF, defined as φ(r) = r^2 * log(r) with φ(0) = 0 by convention (the limit as r approaches 0, since log 0 is undefined), finds its niche in 2D interpolation problems. It's frequently employed in image warping and registration tasks, making it a favorite among computer vision researchers and practitioners working on image processing applications.
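The sketch below shows 2D thin plate spline interpolation through a handful of control points, as used in landmark-based image warping. It handles the r = 0 case with the φ(0) = 0 convention. The standard formulation adds an affine term a0 + a1*x + a2*y together with the side conditions P^T w = 0, which makes the system well-posed as long as the points are not all collinear. The control points and target values are illustrative assumptions. In an actual warp, one such spline would be fitted per output coordinate. Function names are hypothetical.

```python
import numpy as np

def thin_plate_spline(r):
    """phi(r) = r^2 log(r), using the limit phi(0) = 0."""
    r = np.asarray(r, dtype=float)
    out = np.zeros_like(r)
    mask = r > 0
    out[mask] = r[mask] ** 2 * np.log(r[mask])
    return out

def fit_tps(points, values):
    """Fit s(x) = sum_i w_i phi(||x - x_i||) + a0 + a1*x + a2*y through 2D points."""
    n = len(points)
    K = thin_plate_spline(np.linalg.norm(points[:, None] - points[None, :], axis=-1))
    P = np.hstack([np.ones((n, 1)), points])          # affine basis: [1, x, y]
    A = np.block([[K, P], [P.T, np.zeros((3, 3))]])   # side conditions P^T w = 0
    b = np.concatenate([values, np.zeros(3)])
    sol = np.linalg.solve(A, b)
    return sol[:n], sol[n:]                           # RBF weights, affine coefficients

def eval_tps(points, w, a, queries):
    """Evaluate the fitted spline at each query point."""
    K = thin_plate_spline(np.linalg.norm(queries[:, None] - points[None, :], axis=-1))
    return K @ w + a[0] + queries @ a[1:]

# Control points and the warped x-coordinate of each one (illustrative values)
src = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]])
dst_x = np.array([0.0, 1.0, 0.1, 1.1, 0.6])

w, a = fit_tps(src, dst_x)
print(eval_tps(src, w, a, src))  # reproduces dst_x at the control points
```

If the target values are purely affine, the RBF weights come out as zero and only the affine part remains. The radial terms account solely for the non-affine bending.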

Advantages of Radial Basis Functions

The popularity of radial basis functions in machine learning and data analysis stems from several key advantages:

Firstly, RBFs excel at capturing non-linear relationships in data. This ability to model complex patterns that linear methods might miss makes them invaluable in a wide range of real-world applications where data rarely follows simple linear trends.
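A classic way to see this is the XOR problem. This illustration is added here and is not taken from the text above. No straight line separates the two XOR classes in the original (x1, x2) space. After mapping the inputs through two Gaussian RBF units, however, a single linear rule separates them. The centers and width (2σ^2 = 1) are chosen by hand for the illustration.

```python
import numpy as np

# XOR: no straight line in (x1, x2) separates the two classes
X = np.array([[0, 0], [1, 1], [0, 1], [1, 0]], dtype=float)
y = np.array([0, 0, 1, 1])

# Two Gaussian RBF units exp(-||x - c||^2), i.e. 2 * sigma^2 = 1,
# centered on the two class-0 points (hand-picked for this example)
centers = np.array([[0.0, 0.0], [1.0, 1.0]])
H = np.exp(-np.sum((X[:, None, :] - centers[None, :, :]) ** 2, axis=-1))

print(np.round(H, 3))
# In RBF feature space one linear rule separates the classes: h1 + h2 < 1 -> class 1
pred = (H.sum(axis=1) < 1.0).astype(int)
print(pred)  # [0 0 1 1]
```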

Secondly, depending on the chosen RBF, these functions can capture both local and global features of data distributions. This flexibility allows practitioners to tailor their approach based on the specific requirements of their problem, whether it's focusing on fine-grained local details or broader, overarching patterns.

Thirdly, RBF networks often offer a degree of interpretability that's lacking in some other complex machine learning models. Each hidden node has a clear geometric interpretation: it responds most strongly to inputs near its center. Inspecting the centers and widths therefore shows which regions of the input space the model relies on when making decisions.

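Furthermore, RBF networks can often be trained much faster than other neural network architectures. A common approach is to fix the centers and widths first, for example by clustering the inputs. Because the output weights then enter the model linearly, they can be found with a single linear least-squares solve rather than many gradient-descent iterations. This can be a significant advantage when working with large datasets or in time-sensitive applications.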
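A minimal sketch of this two-stage scheme on a 1D regression problem follows. It assumes evenly spaced centers, a shared width equal to the center spacing, and a bias column. All of these are illustrative choices, and the noisy sine data are synthetic. Once the centers are fixed, training reduces to one call to `np.linalg.lstsq`.

```python
import numpy as np

def gaussian_design_matrix(x, centers, sigma):
    """H[i, j] = exp(-(x_i - c_j)^2 / (2 sigma^2)) for 1D inputs, plus a bias column."""
    H = np.exp(-(x[:, None] - centers[None, :]) ** 2 / (2.0 * sigma ** 2))
    return np.hstack([H, np.ones((len(x), 1))])

# Noisy samples of a smooth target (synthetic data)
rng = np.random.default_rng(1)
x_train = np.sort(rng.uniform(0.0, 2.0 * np.pi, 200))
y_train = np.sin(x_train) + 0.1 * rng.standard_normal(200)

# Stage 1: fix the centers (here a uniform grid) and a shared width
centers = np.linspace(0.0, 2.0 * np.pi, 12)
sigma = centers[1] - centers[0]

# Stage 2: output weights from one linear least-squares solve, no gradient iterations
H = gaussian_design_matrix(x_train, centers, sigma)
weights, *_ = np.linalg.lstsq(H, y_train, rcond=None)

# Compare the fitted network with the noise-free target on a test grid
x_test = np.linspace(0.0, 2.0 * np.pi, 50)
y_hat = gaussian_design_matrix(x_test, centers, sigma) @ weights
print(np.max(np.abs(y_hat - np.sin(x_test))))
```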

Another crucial advantage is the universal approximation capability of RBF networks. Theoretical studies have shown that, given sufficiently many nodes, these networks can approximate any continuous function on a compact (closed and bounded) domain to arbitrary precision. This property means that RBF networks can, in principle, model even very complex relationships in data. It does not, however, say how many nodes or how much data a given problem requires.
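The following is an empirical illustration, not a proof. It fits a continuous target that has a kink at 0 using Gaussian RBF networks of increasing size and prints the maximum error on the fitting grid. The target function, the evenly spaced centers, and the rule of setting the width equal to the center spacing are all assumptions made for the demonstration.

```python
import numpy as np

def fit_and_score(n_centers, f, x):
    """Least-squares Gaussian RBF fit with evenly spaced centers; returns the max error on x."""
    centers = np.linspace(x.min(), x.max(), n_centers)
    sigma = centers[1] - centers[0]  # width tied to the center spacing
    H = np.exp(-(x[:, None] - centers[None, :]) ** 2 / (2.0 * sigma ** 2))
    w, *_ = np.linalg.lstsq(H, f(x), rcond=None)
    return np.max(np.abs(H @ w - f(x)))

target = lambda x: np.abs(x) + 0.3 * np.sin(6.0 * x)  # continuous, with a kink at 0
x = np.linspace(-1.0, 1.0, 400)

errors = {n: fit_and_score(n, target, x) for n in (5, 10, 20, 40)}
for n, err in errors.items():
    print(n, round(float(err), 4))
```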

Lastly, many RBFs, particularly the Gaussian RBF, exhibit good tolerance to input noise. This robustness makes them well-suited for real-world applications where data is often noisy or imperfect.

Real-World Applications of Radial Basis Functions

The versatility and power of radial basis functions have led to their adoption across a wide range of fields and problem domains. Let's explore some of the most impactful use cases:

In the realm of function approximation and interpolation, RBFs shine brightly. Financial analysts leverage RBFs to predict stock prices and option values based on historical data, enabling more informed investment decisions. Engineers use these functions to model complex physical systems and processes, from fluid dynamics to structural analysis. Climate scientists employ RBFs to interpolate climate data across geographical regions, helping to fill gaps in observational data and improve climate models.

Pattern recognition and classification tasks have also benefited greatly from RBFs. In the field of computer vision, RBF-based models are used for image recognition tasks, from classifying objects in photographs to detecting anomalies in medical imaging. Handwriting recognition systems, which convert handwritten text to digital form, often rely on RBF networks to handle the variability in human handwriting. Speech recognition systems, too, have incorporated RBFs to improve their ability to identify spoken words and phrases across different accents and speaking styles.

Time series analysis and forecasting represent another area where RBFs have made significant contributions. Meteorologists use RBF-based models for weather forecasting, predicting future conditions based on historical data and current observations. Economists employ similar techniques for economic forecasting, projecting indicators like GDP growth, inflation rates, and market trends. In the energy sector, load forecasting models based on RBFs help predict electricity demand, enabling more efficient power grid management and reducing the risk of blackouts.

Control systems across various industries have also embraced RBF networks. In robotics, these functions are used to implement adaptive control for robot arm movements, allowing for more precise and flexible manipulation. Autonomous vehicle systems utilize RBFs in their navigation and obstacle avoidance algorithms, contributing to safer and more reliable self-driving capabilities. Industrial process control systems leverage RBFs to optimize manufacturing processes, improving efficiency and product quality.

In the domain of signal processing, RBFs find numerous applications. Audio engineers use RBF-based algorithms for noise reduction, filtering out unwanted sounds from recordings. In telecommunications, RBFs aid in signal reconstruction, helping to recover original signals from incomplete or corrupted data. Feature extraction tasks, crucial in many machine learning pipelines, often employ RBFs to identify key characteristics in complex signals for further analysis.

Implementing Radial Basis Functions: A Practical Approach

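To illustrate the practical implementation of RBFs, let's consider a simple example using Python and the scikit-learn library. The following code demonstrates how to create and train an RBF network for a binary classification task:

from sklearn.cluster import KMeans
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.metrics.pairwise import rbf_kernel
from sklearn.model_selection import train_test_split

# Generate a synthetic classification dataset
X, y = make_classification(n_samples=1000, n_features=20, n_classes=2, random_state=42)

# Split the data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Hidden layer: place 100 RBF centers with k-means on the training inputs
centers = KMeans(n_clusters=100, n_init=10, random_state=42).fit(X_train).cluster_centers_

# Gaussian activations exp(-gamma * ||x - c||^2); gamma = 1 / n_features is rbf_kernel's default
gamma = 1.0 / X_train.shape[1]
H_train = rbf_kernel(X_train, centers, gamma=gamma)
H_test = rbf_kernel(X_test, centers, gamma=gamma)

# Output layer: a linear (logistic) model trained on the RBF activations
output_layer = LogisticRegression(max_iter=1000).fit(H_train, y_train)

# Make predictions on the test set and print the accuracy
y_pred = output_layer.predict(H_test)
accuracy = accuracy_score(y_test, y_pred)
print(f"Accuracy: {accuracy:.2f}")

scikit-learn does not provide a dedicated RBF network class. Its MLPClassifier accepts only the activations 'identity', 'logistic', 'tanh', and 'relu', not 'rbf'. This code therefore assembles a simple RBF network from standard components. k-means places the centers, rbf_kernel computes the Gaussian hidden-layer activations exp(-γ||x - c||^2), which match the Gaussian RBF above with γ = 1/(2σ^2), and a logistic regression acts as the linear output layer. After training on the synthetic dataset, the code evaluates the model's accuracy on a held-out test set. The number of centers and the value of γ are illustrative choices that usually need tuning on real data.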

Challenges and Future Directions

While radial basis functions offer numerous advantages, they are not without challenges. The curse of dimensionality can pose difficulties as the number of input features increases. Covering the input space with centers at a fixed spacing requires a number of centers that grows exponentially with the dimension, which can lead to computational bottlenecks. Selecting appropriate centers for RBF nodes and tuning parameters like the Gaussian function width can significantly affect performance, requiring careful consideration and often empirical testing.
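As a starting point for the center and width choices mentioned above, one common pattern is to place centers with k-means and then set a shared width with a simple rule of thumb. The sketch below implements plain Lloyd's k-means in NumPy, followed by one commonly cited heuristic, σ = d_max / sqrt(2M). Here d_max is the largest distance between any two of the M centers. This heuristic is only one of several in use, and the three-cluster data are synthetic. Results from either step should be validated empirically.

```python
import numpy as np

def kmeans_centers(X, k, n_iter=50, seed=0):
    """Plain Lloyd's k-means: places RBF centers where the data lie."""
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=k, replace=False)].copy()
    for _ in range(n_iter):
        # Assign each point to its nearest center
        d = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=-1)
        labels = d.argmin(axis=1)
        # Move each center to the mean of its points (keep it if its cluster is empty)
        for j in range(k):
            members = X[labels == j]
            if len(members) > 0:
                centers[j] = members.mean(axis=0)
    return centers

def width_heuristic(centers):
    """Rule of thumb sigma = d_max / sqrt(2M), with d_max the largest inter-center distance."""
    d = np.linalg.norm(centers[:, None, :] - centers[None, :, :], axis=-1)
    return d.max() / np.sqrt(2.0 * len(centers))

# Three synthetic 2D clusters
rng = np.random.default_rng(42)
X = np.vstack([rng.normal(loc, 0.3, size=(100, 2)) for loc in ([0, 0], [3, 0], [0, 3])])

centers = kmeans_centers(X, k=6)
sigma = width_heuristic(centers)
print(centers.shape, round(float(sigma), 3))
```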

Looking to the future, research in radial basis functions continues to evolve. Hybrid models that combine RBFs with other machine learning techniques, such as support vector machines or deep neural networks, are being explored to create more powerful and flexible systems. Adaptive RBF networks that can automatically adjust their structure and parameters during training are another area of active research, promising to make these models even more accessible and effective.

The advent of quantum computing also opens up exciting possibilities for RBF algorithms. Quantum-inspired RBF implementations could potentially solve complex problems more efficiently, pushing the boundaries of what's possible in fields like cryptography, optimization, and simulation.

As the field of explainable AI gains prominence, the interpretability of RBF networks makes them an attractive subject of study. Researchers are working on methods to extract meaningful insights from RBF models, contributing to the development of more transparent and trustworthy AI systems.

In conclusion, radial basis functions represent a powerful and versatile tool in the machine learning arsenal. Their ability to capture complex, non-linear relationships in data, combined with their efficiency and interpretability, supports their continued relevance in an increasingly data-driven world. As we push the boundaries of artificial intelligence and data analysis, RBFs are likely to remain a useful building block for intelligent systems across a wide range of industries and applications.