In the ever-evolving landscape of technology, artificial intelligence (AI) stands as a beacon of innovation, promising to revolutionize industries and reshape our daily lives. However, the path to successful AI implementation is often misunderstood and fraught with challenges. This comprehensive guide explores the AI Hierarchy of Needs, a crucial framework that outlines the foundational elements required for effective AI deployment and long-term success.

Understanding the AI Hierarchy of Needs

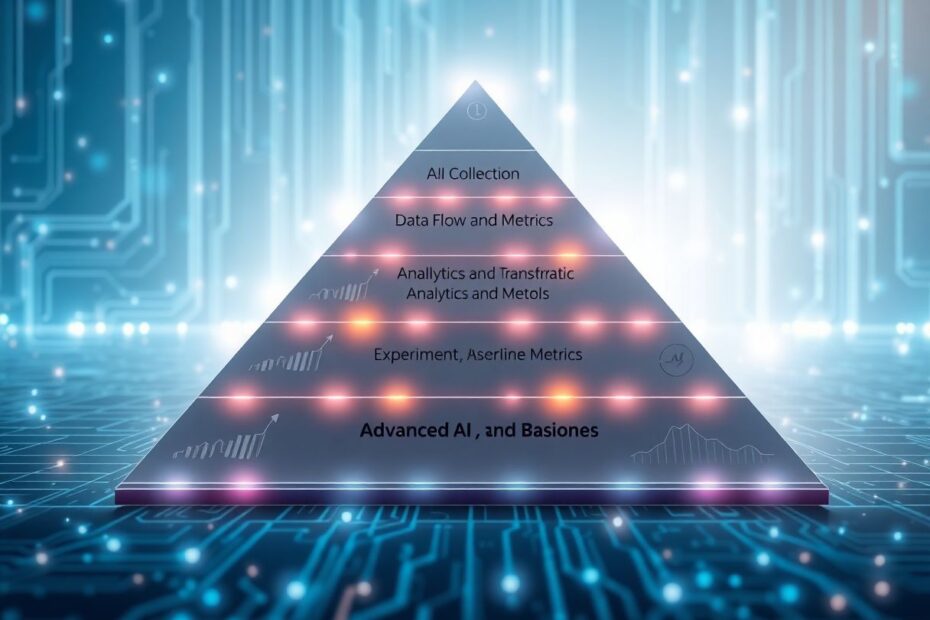

The AI Hierarchy of Needs is a conceptual model that draws inspiration from Maslow's Hierarchy of Needs in psychology. Just as Maslow's theory suggests that basic human needs must be met before higher-level aspirations can be pursued, the AI Hierarchy posits that certain fundamental requirements must be satisfied before organizations can achieve advanced AI capabilities.

This pyramid-like structure consists of six levels, each building upon the previous one:

- Data Collection

- Data Flow and Infrastructure

- Data Exploration and Transformation

- Analytics and Metrics

- Experimentation and Baseline Models

- Advanced AI and Machine Learning

Let's delve into each level to understand its significance and the technical considerations involved.

The Foundation: Data Collection

At the base of the AI Hierarchy lies data collection, the bedrock upon which all AI initiatives are built. This crucial step involves identifying relevant data sources, implementing robust logging systems, and ensuring comprehensive coverage of user interactions or sensor data.

The Importance of Diverse Data Sources

In the pursuit of AI excellence, organizations must cast a wide net when it comes to data collection. This means going beyond traditional structured data and embracing unstructured data sources such as text, images, audio, and video. According to a report by IDC, unstructured data is growing at a rate of 55-65% per year, and by 2025, it is estimated that 80% of all data will be unstructured.

For instance, a healthcare AI system might need to process not just electronic health records, but also medical imaging data, patient-reported outcomes, and even data from wearable devices. This diverse dataset provides a more holistic view of patient health, enabling more accurate diagnoses and personalized treatment plans.

Implementing Robust Logging Systems

Efficient data collection requires sophisticated logging systems that can capture granular details without compromising system performance. Modern logging frameworks like ELK Stack (Elasticsearch, Logstash, and Kibana) or Splunk offer real-time log analysis and visualization capabilities, enabling organizations to monitor data collection processes and identify any gaps or anomalies promptly.

Ensuring Data Quality and Coverage

Data quality is paramount in AI systems. Poor quality data can lead to biased or inaccurate models, undermining the entire AI initiative. Organizations must implement data validation processes to ensure accuracy, completeness, and consistency of collected data.

Moreover, comprehensive coverage is crucial. For example, an e-commerce platform should track not just successful purchases, but also abandoned carts, search queries, and browsing patterns. This holistic approach provides a complete picture of user behavior, enabling more nuanced AI models down the line.

Level 2: Data Flow and Infrastructure

Once data is collected, it needs to flow seamlessly through the organization's systems. This level focuses on creating reliable data streams, implementing Extract, Transform, Load (ETL) processes, and designing efficient data storage solutions.

Building Scalable Data Pipelines

Modern data pipelines must be designed with scalability in mind. Technologies like Apache Kafka or Amazon Kinesis allow for real-time data streaming, capable of handling millions of events per second. These systems ensure that data flows smoothly from source to destination, maintaining data integrity and enabling real-time analytics.

The Role of Cloud Computing

Cloud platforms have revolutionized data infrastructure, offering scalable and cost-effective solutions for data storage and processing. According to Gartner, by 2025, over 95% of new digital workloads will be deployed on cloud-native platforms, up from 30% in 2021. Cloud services like Amazon S3, Google Cloud Storage, or Azure Data Lake Storage provide virtually unlimited storage capacity, while services like AWS Glue or Azure Data Factory simplify ETL processes.

Data Lakes vs. Data Warehouses

Organizations must choose between data lakes and data warehouses based on their specific needs. Data lakes, which store raw, unprocessed data, are ideal for organizations that require flexibility and want to perform advanced analytics on diverse data types. On the other hand, data warehouses store structured, processed data and are better suited for organizations that need quick access to well-defined datasets for business intelligence and reporting.

Level 3: Data Exploration and Transformation

With data flowing freely, the next step is to make sense of it through exploration and transformation. This level involves data cleaning, preprocessing, exploratory data analysis, and feature engineering.

The Power of Data Cleaning

Data cleaning is a critical yet often underestimated step in the AI pipeline. According to a survey by Anaconda, data scientists spend about 45% of their time on data preparation tasks, including cleaning and organizing data. Tools like OpenRefine or Trifacta can significantly streamline this process, allowing data scientists to focus on more value-added tasks.

Exploratory Data Analysis (EDA)

EDA is the process of analyzing datasets to summarize their main characteristics, often using visual methods. Python libraries like Pandas, Matplotlib, and Seaborn have become indispensable tools for data scientists performing EDA. These tools allow for quick visualization of data distributions, correlations, and trends, providing insights that guide further analysis and model development.

Feature Engineering

Feature engineering is the art of creating new input features for machine learning models. This process requires domain knowledge and creativity. For instance, in a financial fraud detection system, engineers might create features like transaction frequency, average transaction amount, or time since last transaction. These engineered features often prove more predictive than raw data alone.

Level 4: Analytics and Metrics

Building on the previous level, this stage focuses on creating dashboards and reports, performing basic segmentation, identifying trends and anomalies, and preparing training data for machine learning models.

The Rise of Self-Service Analytics

Modern analytics platforms like Tableau, Power BI, or Looker have democratized data analysis, allowing non-technical users to create sophisticated dashboards and reports. This shift towards self-service analytics enables faster decision-making and reduces the burden on data teams.

Advanced Segmentation Techniques

While basic segmentation (e.g., by demographics or purchase history) is valuable, advanced techniques like behavioral segmentation or predictive segmentation can provide deeper insights. For example, a streaming service might use machine learning to segment users based on their viewing patterns, enabling more personalized content recommendations.

Anomaly Detection

Identifying anomalies in data can uncover valuable insights or potential issues. Techniques like Isolation Forests or Autoencoders can automatically detect unusual patterns in data, which is particularly useful in areas like cybersecurity or predictive maintenance.

Level 5: Experimentation and Baseline Models

Before diving into complex AI models, it's crucial to establish a culture of experimentation and deploy simple baseline models. This level involves implementing A/B testing frameworks, establishing performance benchmarks, and creating a data-driven decision-making culture.

The Importance of A/B Testing

A/B testing allows organizations to make data-driven decisions by comparing two versions of a variable (web page, app feature, marketing email, etc.) to determine which performs better. Platforms like Optimizely or Google Optimize have made A/B testing accessible to organizations of all sizes.

Baseline Models: The Unsung Heroes

Simple baseline models often outperform complex algorithms, especially when data quality is poor or the problem is not well understood. For instance, a "most popular" recommendation system might perform surprisingly well compared to more sophisticated collaborative filtering approaches. These baseline models serve as benchmarks against which more advanced models can be compared.

Fostering a Data-Driven Culture

Creating a data-driven culture is as much about people and processes as it is about technology. Organizations must invest in data literacy programs, establish clear data governance policies, and incentivize data-driven decision-making at all levels.

The Summit: Advanced AI and Machine Learning

At the top of the hierarchy, we reach advanced AI applications. This level involves implementing sophisticated machine learning algorithms, deploying deep learning models, and integrating AI into core business processes.

The Rise of AutoML

Automated Machine Learning (AutoML) tools like Google's Cloud AutoML or H2O.ai are democratizing AI development, allowing organizations to build custom machine learning models with minimal expertise. These tools automate tasks like feature selection, algorithm selection, and hyperparameter tuning.

Deep Learning and Neural Networks

Deep learning, a subset of machine learning based on artificial neural networks, has revolutionized fields like computer vision and natural language processing. Frameworks like TensorFlow and PyTorch have made it easier for organizations to implement deep learning models, even for complex tasks like image recognition or language translation.

Reinforcement Learning: The Next Frontier

Reinforcement learning, where AI agents learn to make decisions by interacting with an environment, is pushing the boundaries of what's possible with AI. From game-playing AIs like AlphaGo to autonomous vehicles, reinforcement learning is opening up new frontiers in AI applications.

Conclusion: Embracing the AI Journey

The AI Hierarchy of Needs serves as a roadmap for organizations aspiring to leverage the power of artificial intelligence. It reminds us that AI is not a magical solution but the result of careful planning, robust infrastructure, and a culture of data-driven decision-making.

By respecting this hierarchy and building methodically from the bottom up, organizations can develop AI capabilities that are not only powerful but also sustainable and trustworthy. The journey to AI mastery is long and challenging, but the rewards – in terms of improved efficiency, enhanced customer experiences, and new business opportunities – are immense.

As we look to the future, the AI Hierarchy of Needs will likely evolve. New levels may emerge, such as "AI Ethics and Governance" or "Explainable AI," reflecting the growing importance of responsible AI development. The line between traditional analytics and AI may continue to blur, and automated tools may compress some of the lower levels of the hierarchy.

However, the core principle of building a strong foundation before advancing to complex AI applications will remain relevant. In the world of AI, the quality of your foundation determines the height of your success. Start from the bottom, build methodically, and watch as your AI capabilities grow to new heights, transforming your organization and delivering unprecedented value to your customers.