Are you a Python developer looking to scrape data from Twitter using the Python programming language? Then you are on the right page, as the article below describes the step-by-step guide on how to code a Twitter scraper using Python.

Twitter is a social media platform that is built around the posting of short 140-character messages known as tweets. If you need to post more than that, then you’ll need to create multiple tweets together in a thread. This simple idea has earned Twitter over 237.8 million active users.

These users generate up to 500 million tweets daily and around 200 billion tweets yearly. These tweets carry with their ideas, sentiments, and commentary on social issues. As a researcher or marketer, these tweets are valuable data that can be cleaned and analyzed.

But collecting them manually can be tiring, time-wasting, and even impossible to achieve for a large number of tweets. The only credible alternative is to use an automation bot to collect the required data. Using a Twitter scraper is faster and cheaper than doing so manually. There are already-made no-code Twitter scrapers out there. But if you are a coder, you can develop your own Twitter scraper. This article focuses on how to scrape Twitter using Python.

An Overview of Twitter Scraping

Twitter scraping is the process of using automation bots known as Twitter scrapers to collect publicly available content on the Twitter platform. These web scrapers send requests for a specific Twitter web page; then when the content is returned, it uses a parser to transverse it and extract the required content.

It is the best method to collect data from the Twitter platform because of the kind of restrictions the Twitter API has. One thing you need to know about Twitter is that it is not completely anti-bot. In fact, it does support some form of automation but not necessarily web scrapers.

Because of its support for some level of automation, it means that you could get away with scraping data from the platform easily compared to many other social media platforms.

Even at that, you need to know that Twitter does have an anti-bot system in place to ward off bots from it platform. If you want to develop a Python Twitter scraper, you will need to integrate anti-blocking systems to be able to successfully evade detection. Only when you are able to do that will you be able to scrape data at any reasonable scale.

What Data Can You Scrape from Twitter

What kind of data can you possibly collect from Twitter? Frankly speaking, you can collect any publicly available data you can find on the platform. The important data on Twitter can be classified as follows.

- Tweets: Perhaps tweets are the most important data points on Twitter. Social researchers scrape tweets to carry out numerous analyses, including the famous sentimental analysis, to know what people think about a product or an issue. Scraping tweets is easy because of the use of hashtags. You can also search tweets by words or phrases too.

- Profile: Other important data points on the Twitter platform are profiles. As stated earlier, there are over 237.8 million active users. You can scrape the important details of the profiles for marketing and other reasons. Data you can scrape about a particular profile includes username, bio, followers and those the profile is following, location, date joined, and number of tweets, among others. You can also scrape tweets associated with specific profiles too.

- Generic Data: Sometimes, you are after data that can be found everywhere. These data can be email addresses, phone numbers, website URLs, and what have you. If you are in this situation, you will need to scrape a full tweet and then make use of Regular Expression (Regex) to parse out these specific data points.

Tools for Scraping Twitter Using Python

Scraping Twitter with Python is easy. With even an advanced beginner’s knowledge in Python, you can successfully scrape data from Twitter. You just need to make use of the right tools and techniques to download the web page and then extract the specific data of interest on the page.

In the past, you could use the likes of Requests and BeautifulSoup to scrape Twitter. However, it now depends on Javascript rendering, making requests useless. Below are the common tools used for scraping Twitter.

- Selenium: Selenium is the de-facto tool for scraping pages that depend on Javascript for Python developers. Selenium web driver automates popular browsers like Chrome and Firefox. You can use it to render pages on Twitter and then access data present on the page using the API provided by the same Selenium.

- Tweepy: There is a Twitter API with limitations meant for automation — and Tweepy is the Python library that makes it easy for you to use this API. It integrates with the API, making it easy for you to scrape tweets and perform complex queries with simple commands. You will need to register for a Twitter developer account to use this tool. It is also important you know that there are a lot of limitations you will have to deal with when using Tweepy, like accessing historical data.

- Snscrape: Snscrape is another python library that does not use the Twitter API. And as such, there are no limitations to the number of tweets and the period you can scrape for. However, you will need proxies if you need to send too many web requests, as you will easily get blocked. Snscrape is also not meant for scraping only Twitter — you can use it to scrape other social media platforms like Facebook, Instagram, Reddit, and Telegram, among others.

- Pandas: Since we are dealing with data, we will need a library for handling data, and the Pandas package is the best for Python developers. This is used for data manipulation and analysis in data science.

- Proxies: With Tweepy, you will not need to make use of proxies as your usage will always be within the limit set by the Twitter API. However, if you go with either Selenium or Snscrape, then you will need to make use of proxies. While you can use private proxies, the management of Twitter is working on making the platform difficult for bots, and as such, we recommend you make use of residential proxies.

Steps to Scrape Twitter Using Python and Tweepy

In this section, we will move into the actual coding of the Twitter scraper using Python. We will be scraping tweets with specific users. The code will limit the number of tweets to 100. With this, there is no need to make use of proxies, nor will you have the need to bypass the Twitter API and use another tool. For this reason, we will make use of the Tweepy library.

Step 1: Install Necessary Libraries and Tools

You do not have much to install in this regard. All you need to install is Python and Tweepy — If you do not have Python installed already.

Python

You can’t run any Python script without having python installed on your computer. Most popular Operating Systems come with Python 2 installed. Unfortunately, this is not the Python used by most coders nowadays, as it is only added for legacy reasons. You need to install Python 3 from the official download page on Python’s website. It is available for popular programming languages.

Tweepy

Tweepy is the Python library for scraping tweets. We choose to use it instead of Selenium or Snscrape because of how easily it can help us get this done because of the peculiarity of the tasks at hand. This library is not part of the Python standard library, and as such, you will need to install it. Use the “pip install tweepy” command in your system command prompt.

Pandas

As with Tweepy, Pandas is also not available in the standard Python library and, as such, needs to be downloaded. You can use the pip installer to install it using the “pip install pandas’ command as you did in the case of Teeepy case.

Step 2: Twitter Developer Account Registration

Tweepy depends on the official Twitter API. And this required a developer account to use. Head over to the Twitter Developer Account webpage and create an account. You only need to answer a few questions to tell them what you intend to use Twitter for developers after which you are provided your credentials. These include the consumer key, consumer secret key, access token key, and access token secret key.

Step 3: Import Tweepy and Initialise the Tweepy API

The first step in coding is to import Tweepy, which is done by simply using the import statement together with tweepy (import tweepy). Then create variables for the Twitter developer credentials —- consumer key, consumer secret key, access token key, and access token secret key.

Lastly, pass the credentials to the Tweepy authentication object handler. You can then pass this to. The tweepy object to start using the user. We also imported pandas as dp. Below is a full code for all of these.

import tweepy import pandas as pd # fill the variables below with credential details from Twitter Develoepr Account consumer_key = "" consumer_secret = "" access_token = "" access_token_secret = "" #Pass in our twitter API authentication key auth = tweepy.OAuth1UserHandler( consumer_key, consumer_secret, access_token, access_token_secret ) api = tweepy.API(auth, wait_on_rate_limit=True)

Step 4: Specify Twitter Username Profile, Number of Tweets, and feed Data to Pandas

The next step is to specify the username of the profile you want to scrape its tweets. Let’s use the footballer Messi’s Twitter handle @TeamMessi. As stated earlier, the number of tweets to scrape is 100. Using list comprehension, we pulled out some of the tweet attributes and fed them to Pandas to create a data frame. Below is the code of how we did that.

username = "TeamMessi"

no_of_tweets = 100

try:

# The number of tweets we want to retrieved from the user

tweets = api.user_timeline(screen_name=username, count=no_of_tweets)

date_created = tweets.created_at

likes_count = tweets.favorite_count

tweet_source = tweets.source

tweet = tweets.text

# Pulling Some attributes from the tweet

attributes_container = [[date_created, likes_count, tweet_source, tweet] for i in tweets]

# Creation of column list to rename the columns in the dataframe

columns = ["Date Created", "Number of Likes", "Source of Tweet", "Tweet"]

# Creation of Dataframe

tweets_df = pd.DataFrame(attributes_container, columns=columns)

except BaseException as e:

print('Status Failed On,', str(e))Step 5: Restructure the Code

As the code is above, it works as if you call the tweets_df.head(100), you will see all of the tweets scraped. However, we will have to hardcode the username of a Twitter profile and the number of tweets we want to scrape. If you want to structure the code in such a way that you can programmatically change these values easily, you can restructure the code into a function that accepts username and number of tweets as variables. Below is the full code restructured into a function.

import tweepy

import pandas as pd

def scrape_tweets(username, number_of_tweets):

# fill the variables below with credential details from Twitter Develoepr Account

consumer_key = ""

consumer_secret = ""

access_token = ""

access_token_secret = ""

#Pass in our twitter API authentication key

auth = tweepy.OAuth1UserHandler(

consumer_key, consumer_secret,

access_token, access_token_secret

)

api = tweepy.API(auth, wait_on_rate_limit=True)

username = username

no_of_tweets = number_of_tweets

try:

# The number of tweets we want to retrieved from the user

tweets = api.user_timeline(screen_name=username, count=no_of_tweets)

date_created = tweets.created_at

likes_count = tweets.favorite_count

tweet_source = tweets.source

tweet = tweets.text

# Pulling Some attributes from the tweet

attributes_container = [[date_created, likes_count, tweet_source, tweet] for i in tweets]

# Creation of column list to rename the columns in the dataframe

columns = ["Date Created", "Number of Likes", "Source of Tweet", "Tweet"]

# Creation of Dataframe

tweets_df = pd.DataFrame(attributes_container, columns=columns)

except BaseException as e:

print('Status Failed On,', str(e))

c = scrape_tweets("TeamMessi", 100)Improvement to Make to the Code Above

If you look at the code above and you paid attention to all of what we wrote above, you will notice that this code is highly limited. Because it depends on the Twitter official API for developers, there is a limit to what you can do with it.

The real power of web scraping lies in the fact that you can bypass the limitations of APIs and scrape the data you want from web pages without restrictions. The best way to improve the code above is to rewrite it — this time, not using Tweep.

I mentioned Selenium and Snscrape as the other tools that can be used to scrape Twitter. They provide more freedom. However, you are sure to run into problems using them that Tweep.

This is because Twitter does not allow automation without its official API. For you to successfully use any of them, you will need to integrate anti-detection techniques like the use of rotating proxies to avoid getting detected. If you intend to use Selenium, then I recommend you use the undetected chromedriver together with Selenium to avoid detection.

How to Scrape Twitter Using Already-made Python Twitter Scraper

Developing your own Twitter scraper as a Python developer is good. But except you want to do it for the fun of it, or you have got all of the experience to avoid getting blocked, you might just want to make use of an already-made solution. Twitter is a popular target for web scraping, and Python is the de facto language for that. With this, there is a good number of already-made scrapers for you to make use of. You can check GitHub and any other search engine of your choice for that.

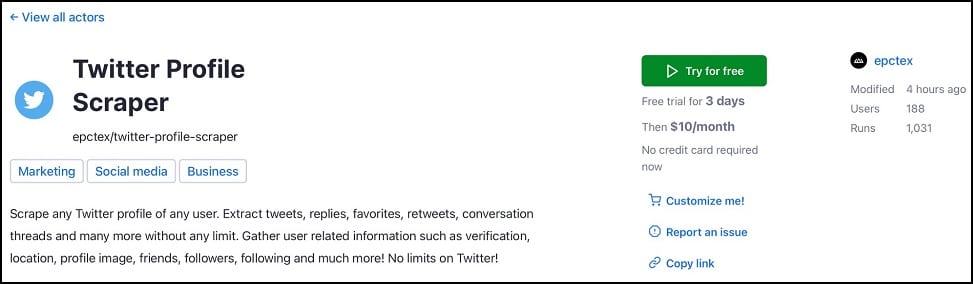

As for a recommendation, we recommend you make use of Apify. This service provides a host of web scrapers not only for Twitter but for most popular websites. Below is how to use the Twitter profile scraper to scrape profile details from Twitter.

Step 1: Visit the official website of Apify and register an account. You will be given an API Token that will be your authentication module.

Step 2: Install the Apify Python Client with this pip command — pip install apify-client”

Step 3: Head over to the page of the Twitter Profile Scraper on the Apify website.

Step 4: The tool is paid with a price tag of $10 monthly, but as a new user, you can use it for free for 3 days.

Step 5: You should read the document to know how to use it properly. The code below is an example code provided by Apify on how to make use of the Twitter Profile Scraper.

from apify_client import ApifyClient

client = ApifyClient("<YOUR_API_TOKEN>")

run_input = {

"startUrls": ["https://twitter.com/apify"],

"maxItems": 20,

"proxy": { "useApifyProxy": True },

}

run = client.actor("epctex/twitter-profile-scraper").call(run_input=run_input)

for item in client.dataset(run["defaultDatasetId"]).iterate_items():

print(item)How to Scrape Twitter Using Python Web Scraping API

There are 3 ways to scrape Twitter with Python. The two methods above are quite effective. But if you need a method that does the heavy lifting for you so you do not have to worry about getting blocked, managing proxies, and solving captchas, then using a web scraping API is the way to go.

With a web scraping API, all you have to do is send a simple web request and get back the data you want as a JSON response. There are a good number of web scraping APIs out there that can do that. But we one we trust the most to get the job done with a Python SDK is the Crawlbase Scraper API, formerly known as ProxyCrawl Scraper API.

FAQs

Q. What is Twitter Scraping?

Twitter scraping is the process of using automation bots to extract data from the Twitter platform. These automation bots for web data extraction are known as web scrapers.

For Twitter, there are specialized bots for it known as Twitter scrapers. With Twitter scrapers, you can scrape all publicly available data from Twitter. These range from user profiles to tweets, comments, and engagement data. Scraping Twitter is less difficult when the difficulty is compared to scraping the other social media platforms.

Q. Does Twitter Allow Scraping?

The answer to this is a YES and NO. Yes, because Twitter allows the extraction of data from its pages, and it even provides an API for that. However, the API is quite restrictive in many areas, such as limiting you to data from specific dates — no historical data scraping.

For most people, the data provided via the official data pipeline is not enough, and thus, they are forced to make use of data using third-party options like web scraping. This is not allowed by Twitter, and it has systems in place to block you. However, the blocks can be bypassed, and doing so is not illegal in many cases.

Q. What Proxies are the Best for Scraping Twitter?

Twitter is a bot relaxed when it comes to blocking proxies from accessing its platform. For this reason, you could use datacenter proxies to scrape Twitter.

But for the best experience, you are better off using rotating residential proxies. This is because residential IPs are more legitimate, and they also get rotated frequently, which makes it difficult for Twitter to detect your activities and block you. However, residential proxies are expensive compared to datacenter proxies.

Conclusion

The data public on the Twitter platform is incredibly important to businesses, research agencies, and data scientists. If you are interested in collecting data from the platform and you have Python coding skills, you can do that easily.

Above, we discuss the 3 methods you can use to collect data from Twitter. Before you do the scraping, it is important you check the legal implication of your specific scraping task to avoid getting into trouble with the law.