Are you looking forward to extracting web data from the Internet as a Python developer, and you are looking for headway? Then you are on the right page as we will be taking you through the basics of web scraping using Python in this article.

Data is now known as the new oil, and the most important companies in the world depend on it for their decision-making processes. While there are different sources of data for making decisions, the Internet is one of the major sources that can’t be ignored.

In fact, for some industries and use cases, the Internet is the single most important source. And with the Internet, it is no longer the availability of data that is the problem; it is how to extract, clean, and use it for your data analysis and decision-making process.

The process of extracting publicly available data n the Internet can be hectic, repetitive, and even error-prone. For some magnitude of data, it is even impossible for you to collect manually. That is why we resort to using automated methods to collect data. The automated method of extracting publicly available data from web pages is known as web scraping.

This process is carried out using an automation bot known as web scrapers. There are already-made web scrapers, but you can create one yourself using your favorite programming language, such as Python programming language.

Python for Web Scraper Development

It might interest you to know that Python is one of the most popular programming languages for development bots, such as web scrapers. In fact, it is the de facto language for teaching web scraping, and you can confirm that by the number of guides utilizing it in their elementary web scraping guides. There are reasons why Python is loved by many bot developers and web scrapers. Below are some of the reasons why Python is the perfect language for web scraping.

Easy to Code and Understand: The number one reason why Python is popular for web scraping is its ease of usage and simple syntax. In fact, most people that got into web scraping without coding experience started with Python. This is because it is easy to use and takes away all of the verbose syntaxes that will confuse you as a beginner.

There are no curly braces or semi-colons anywhere, as with other popular languages, making it less messy. In fact, it is similar to reading statements in English. Its indentation also makes it easier to read.

Fewer Lines of Code: with Python programming, you right less to get more done. And this goes with the principle of automation. If you will take more time writing code than it will take you to extract data manually, then there is no point automating it. With just a few lines of code, you can achieve a lot with Python. Usually, you can do more. Web scraping tasks with fewer lines of code in Python than in any other programming language.

Large Collection of Web Scraping Libraries: Available libraries and third-party tools is also another reason why Python will also ways be a go-to language for web scraping. There are numerous libraries for anything you want to achieve. The popular ones include Requests for sending web requests and BeautifulSoup for traversing parsing data of interest from downloaded pages. There is also a huge library for data analysis, such as Pandas, Numpy, and Matplotlib,

Huge Community: One of the advantages of being a popular programming language for web scraping is that there is a huge community supporting it. There is no issue that you will experience now that others have not, and as such, it is easier to get help with Python when web scraping.

How to Carry out Web Scraping with Python

As with all other tasks in programming, there are steps you need to follow in other to get this done. If you follow the steps correctly, you should be able to write an algorithm that will go through the steps required to extract data of interest of the web pages you want. Below is the steps you should follow in other to carry out a successful web scraping operation using Python.

Step 1: Brainstorm on a Web Scraping Project

You can’t learn how to code web scrapers aimlessly. You will need a focus, and that focus will be the data you want to scrape. In this guide, I want to make the project so simple and easy to understand. So, the project we will be working on will be a web scraper that extracts all of the URLs on a page — simple. The web scraper won’t be an advanced web scraper and won’t be doing anything legendary. Just give it a URL, and it will return all of the URLs present on the web page of the URL you provide.

We won’t be handling exceptions or any kind of error. This will just be a proof of concept. It is also important you know that the web scraper will only work on static pages. Web pages that depend on JavaScript rendering to display content won’t be supported.

Step 2: Install Python and Associated Web Scraping Library

Usually, most computers come with Python installed. But the version installed is Python 2. This is not the current version, and you will have a hard time coding web scraper with it because of the lack of availability of libraries that support it. You will need to install the current version of Python 3. Go to the official Python website to download and install the specific installer for your Operating System (OS).

Next, you need to install the associated libraries for web scraping. Python comes with networking support and a library known as the urllib for handling HTTP requests. It also does comes with its own parser. However, these are not beginner friendly. To make things easier for you, we will recommend you make use of easier-to-use third-party libraries.

The duo of Requests and BeautifulSoup will get the job done. Requests is an HTTP library, while BeautifulSoup is for extracting specific data points from pages downloaded using Requests. Since they are third-party libraries, you will need to install them. You can use the pip install command to install these 2 libraries.

Run the command below in your command prompt to install Requests

pip install requests

Run the command below to install BeautifulSoup

pip install beautifulsoup4

If the installation is successful for both, you can move to the next step.

Step 3: Choose a Target URL

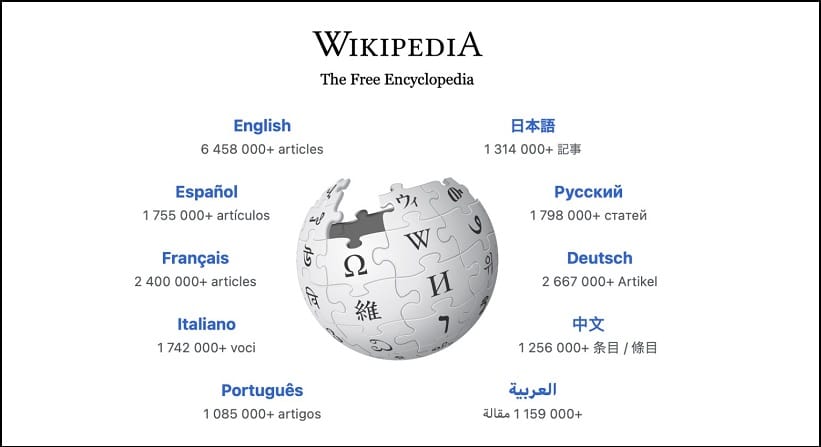

We have chosen a simple project to work on. But we also need a specific web page to use for the guide. Let use the Wikipedia home page as a sample URL — https://www.wikipedia.org. You can use any URL of your choice. The task here is simple, and that is why I choose the Wikipedia home page.

We are going to be scraping all of the URLs found on this page. If we had wanted to make the bot advanced, we could scrape only URLs that meet certain criteria or even convert it to a crawler where it visits each of the scraped URLs and scrape the URL on it, and the process goes on. As you can see, it is from web scrapers that web crawlers are made. The first step in web scraping actually involves sending web requests. Below is how to send a web request in Python using the Request library.

import requests

headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.77 Safari/537.36"}

response = requests.get("http://www.wikipedia.org", headers=headers)

print(response.text)As you can see from the above, we use the get method from requests to download the content of the https://www.wikipedia.org page. We added a header so we could add a custom user-agent string to trick our target into thinking we are using a real browser. If you run the code above, you will see the HTML of the Wikipedia homepage printed on the screen — using the print function.

Step 4: Inspect the HTML of the Page

Web scraping entails extracting specific data from a page. Let’s say extracting price data from a product page or comments from a forum discussion. On this page, all we want is the URLs on the Wikipedia page. The first step is to inspect the source code of the page. To do that, right-click on the page and click on “View page source”. You will see something like the one below.

If you look at the HTML code above, you will see that the URLs are enclosed in the a tag. Below is one of the sample tags.

<a id="js-link-box-pt" href="//pt.wikipedia.org/" title="Português — Wikipédia — A enciclopédia livre" class="link-box" data-slogan="A enciclopédia livre"> <strong>Português</strong> <small><bdi dir="ltr">1 085 000+</bdi> <span>artigos</span></small> </a>

To extract the URLs from the page, check the code below

import requests

from bs4 import BeautifulSoup

headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.77 Safari/537.36"}

response = requests.get("http://www.wikipedia.com", headers=headers)

soup = BeautifulSoup(response.text)

links = soup.find_all("a")

for link in links:

print(link.get("href"))As you can see, if you run the code, you will see the URLs printed on the screen.

Step 5: Refine the Code

Let’s restructure the code into a function that accepts a URL as an input and spite out the URLs on the page as output.

import requests

from bs4 import BeautifulSoup

def scrapeURLs(url):

headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.77 Safari/537.36"}

response = requests.get(url, headers=headers)

urls = []

soup = BeautifulSoup(response.text)

links = soup.find_all("a")

for link in links:

urls.append(link.get("href"))

return urlsProblems Associated with Web Scraping Using Python

You can see how simple it is to scrape data from the Internet with Python. But what are the specific problems you will run through when scraping with python?

Performance Issues

The major issue you’ll deal with when scraping with Python is actually a performance problem. Python is not fast likewise its associated libraries. The Multithread support of Python is also not a full-fledged one compared to the likes of Java. For most users, though, the speed is not a problem. But if you are dealing with a project where performance is a major concern and you need to milk out as much bare metal performance as you can, then Python is not the language for the job.

IP Blocks

This problem is not exclusive to Python web scrapers. All web scrapers have to deal with this. Web scrapers, by design, send too many requests within a short period of time. Unfortunately, most websites do not support this and, as such, have request limits baked into their anti-spam system. After a few requests, web scrapers get blocked, except they take a conscious effort to bypass this. Most anti-spam systems depend on one’s IP address for identification. With the help of rotating proxies, you can hide your real IP address and get as many alternative IPs as you want for your tasks without getting blocked. Bright Data and Smartproxy are some of the best providers out there.

Captcha Interference

Sometimes, the block is not as stiff as that of the IP block. If a website suspects you’re accessing it via automated means, it is through Captchas at you to solve. This will throw most basic web scrapers off guard.

However, if you are experienced with computer vision, you could code an automated captcha solver to deal with this. There are also captcha-solving services with APIs you can utilize to get captchas solved automatedly. 2Captcha and DeathByCaptcha are good options.

Conclusion

Unlike in the past, there are already-made web scrapers that you can. Use without writing a single line of code. This means that learning how to code in Python in other to develop your own web scraper is no longer needed. However, if you have a custom need or need to integrate a web scraping logic into your software, then learning how to code web scrapers in Python is key.